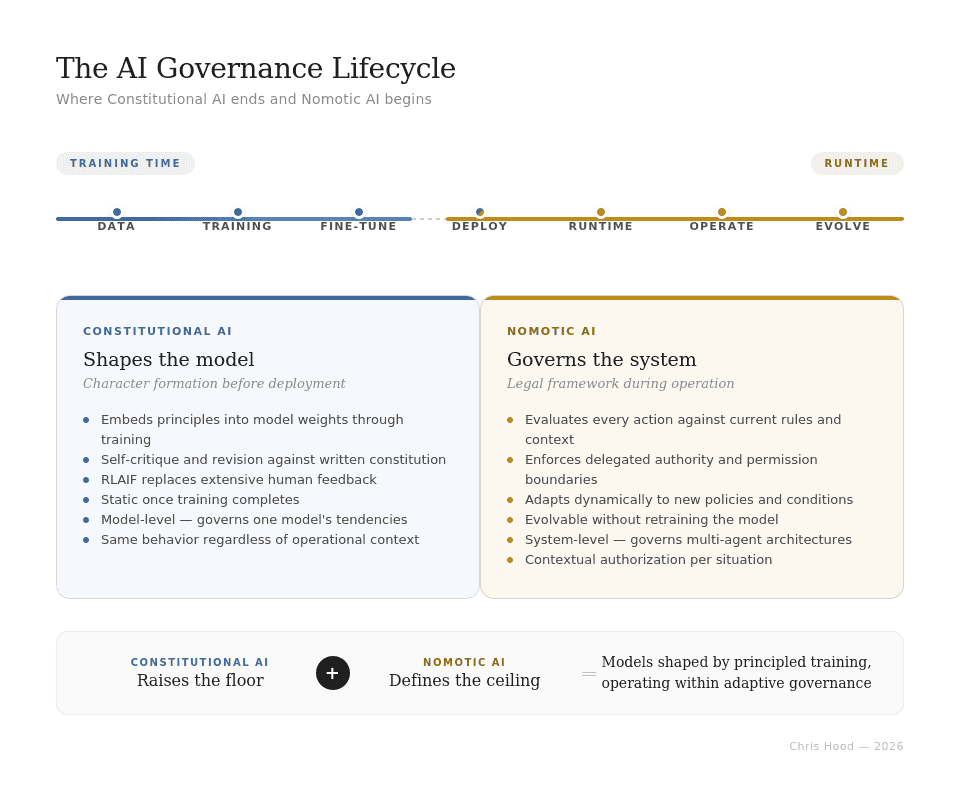

Constitutional AI and Nomotic AI: Full Governance Lifecycle

Both Constitutional AI and Nomotic AI use the word “governance.” Both invoke the idea of rules shaping AI behavior. Both respond to the same underlying anxiety: that capable AI systems need something beyond capability to function responsibly.

That is where the similarities stop.

Constitutional AI and Nomotic AI tackle different problems, at different points in the AI lifecycle, for different audiences, and with different results. Treating them as interchangeable, or assuming one can replace the other, misses the point of what each is meant to accomplish.

What Constitutional AI Actually Is

Constitutional AI is a training approach developed by Anthropic. The central idea is simple: rather than depending only on human feedback to teach a model what is harmful, you give the model a set of written principles, which Anthropic calls a constitution, and train it to judge its own outputs by those standards.

The process unfolds in two phases. First, the model responds to difficult prompts, critiques its own answers using the constitution, revises them, and is fine-tuned on these improved outputs. Second, the model compares pairs of its own responses and judges which one better follows the constitution; those AI-generated preferences train a preference model, which then supplies the reward signal for reinforcement learning. The outcome is a model that has absorbed behavioral boundaries before it ever interacts with a user.
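To make the two phases concrete, here is a minimal sketch of the data each phase produces. It is an illustration under stated assumptions, not Anthropic's implementation: every function name below is hypothetical, and the toy stubs stand in for calls to a real language model.

```python
from typing import Callable, List, Tuple

# One example principle; the wording is illustrative, not a quote from any real constitution.
CONSTITUTION = ["Choose the response that is more helpful and harmless."]


def supervised_phase(
    prompts: List[str],
    generate: Callable[[str], str],
    critique: Callable[[str, str], str],
    revise: Callable[[str, str], str],
) -> List[Tuple[str, str]]:
    """Phase 1: draft, self-critique against each principle, then revise.

    Returns (prompt, revised_response) pairs that would be used for fine-tuning.
    """
    dataset = []
    for prompt in prompts:
        response = generate(prompt)
        for principle in CONSTITUTION:
            feedback = critique(response, principle)
            response = revise(response, feedback)
        dataset.append((prompt, response))
    return dataset


def preference_phase(
    prompts: List[str],
    generate_pair: Callable[[str], Tuple[str, str]],
    prefer: Callable[[str, str, List[str]], str],
) -> List[Tuple[str, str, str]]:
    """Phase 2: the model picks the better of two of its own responses.

    Returns (prompt, chosen, rejected) triples for preference learning.
    """
    comparisons = []
    for prompt in prompts:
        a, b = generate_pair(prompt)
        chosen = prefer(a, b, CONSTITUTION)
        rejected = b if chosen == a else a
        comparisons.append((prompt, chosen, rejected))
    return comparisons


# Toy stubs so the sketch runs end to end; they do not resemble real model behavior.
def toy_generate(prompt: str) -> str:
    return "DRAFT: " + prompt

def toy_critique(response: str, principle: str) -> str:
    return "remove the DRAFT marker"

def toy_revise(response: str, feedback: str) -> str:
    return response.replace("DRAFT: ", "")

def toy_generate_pair(prompt: str) -> Tuple[str, str]:
    return ("short", "a longer answer")

def toy_prefer(a: str, b: str, constitution: List[str]) -> str:
    return max(a, b, key=len)  # arbitrary stand-in for a constitutional judgment


sft_data = supervised_phase(["hello"], toy_generate, toy_critique, toy_revise)
pref_data = preference_phase(["hello"], toy_generate_pair, toy_prefer)
```

The point of the sketch is the shape of the outputs: both datasets feed training, which is why the resulting behavior is fixed once training ends.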

Anthropic’s constitution draws from sources including the UN Universal Declaration of Human Rights, trust and safety best practices, and principles from other research labs. The company has also experimented with publicly sourced constitutions, inviting roughly 1,000 members of the American public to contribute and vote on principles.

The main features of Constitutional AI are straightforward. It shapes the model during training, before deployment. It works on the model’s underlying tendencies, aiming to produce models that are helpful, harmless, and honest. Once training is finished, the constitution is part of the model itself. It cannot be changed or updated at runtime; any change requires retraining.

What Nomotic AI Actually Is

Nomotic AI is a way of thinking about governance as the essential balance to capability in AI systems. The word comes from the Greek nomos, meaning law or rule. If agentic AI is about what a system can do, Nomotic AI is about what a system should do, and under which rules.

Nomotic AI is not a training method (like Constitutional AI) or a particular product (like Claude). It is a category of AI (like agentic AI) that covers the rules, boundaries, and accountability structures that guide AI behavior as it operates in the real world.

The characteristics of Nomotic AI differ from those of training-time approaches. It delivers intelligent governance, using AI to understand context and intent. It stays dynamic, adjusting based on evidence and changing conditions. It operates at runtime, guiding actions during execution rather than only at deployment.

Evaluation remains contextual. Decisions consider situations, not just patterns. The system stays transparent, explainable, and auditable so outcomes can be understood and reviewed. Ethical grounding matters. Actions must be justifiable, not merely executable. Accountability anchors the model, with governance that traces back to human responsibility.

Nomotic AI rests on six core ideas: governance is part of the system’s design, not an afterthought; evaluation happens before, during, and after every action; authority is given, not assumed; trust is earned through evidence; actions must be justified on ethical grounds; and all governance ultimately leads back to human responsibility.

The Fundamental Distinction

The simplest way to see the difference is this: Constitutional AI shapes what a model is. Nomotic AI governs what a system does once it is in use.

Constitutional AI operates before the system meets a user. It bakes behavioral tendencies into the model through training. Once the model is deployed, the constitution is not a separate or modifiable layer. It is part of the model’s learned behavior. You cannot update a constitutional principle at 2 PM on a Tuesday because a new regulatory requirement emerged that morning. Changing the constitution requires retraining.

Nomotic AI operates after deployment, at runtime, in the moment of action. It evaluates whether a specific action, in a specific context, by a specific agent, is authorized, appropriate, and aligned with current governance requirements. It can adapt to new rules, new contexts, and new conditions without retraining the underlying model.
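A minimal sketch of what "adapting without retraining" can look like in practice follows. It assumes a hypothetical in-memory `PolicyStore` that sits outside the model; the class, rule names, agent name, and the regional export rule are all invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Action:
    agent: str
    name: str
    context: Dict[str, str] = field(default_factory=dict)


# A rule returns True when the action is allowed under that rule.
Rule = Callable[[Action], bool]


class PolicyStore:
    """Hypothetical runtime rule set, kept separate from the model's weights."""

    def __init__(self) -> None:
        self._rules: Dict[str, Rule] = {}

    def set_rule(self, name: str, rule: Rule) -> None:
        # Adding or replacing a rule takes effect on the next evaluation.
        self._rules[name] = rule

    def is_authorized(self, action: Action) -> bool:
        # An action is authorized only if every current rule allows it.
        return all(rule(action) for rule in self._rules.values())


store = PolicyStore()
store.set_rule("known_agent", lambda a: a.agent in {"support-bot"})

export = Action(agent="support-bot", name="export_data", context={"region": "EU"})
before = store.is_authorized(export)

# A new requirement arrives mid-day (assumed example): block EU data exports.
store.set_rule(
    "no_eu_export",
    lambda a: not (a.name == "export_data" and a.context.get("region") == "EU"),
)
after = store.is_authorized(export)
```

Here `before` is `True` and `after` is `False`: the same agent making the same request gets a different answer once the rule set changes, and the model itself is untouched.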

This is not about one being better than the other. Each addresses a different layer of the challenge.

Consider an analogy. Constitutional AI is like the education and moral formation a person receives as they grow up. It shapes character, instills values, and creates behavioral tendencies that persist throughout life. Nomotic AI is like the legal and institutional framework within which that person operates as an adult: the laws, regulations, professional codes, and organizational policies that govern specific actions in specific contexts. A well-educated person still needs laws. Good character does not eliminate the need for governance. Both layers are necessary. Neither is sufficient alone.

The Limits of Constitutional AI

Constitutional AI has limits. Nomotic AI is designed to address what remains.

Static after training. Once training is complete, constitutional principles are fixed. They cannot be changed or extended as conditions shift, unless the model is retrained. In real-world settings where policies and requirements are always evolving, a training-only approach cannot keep up.

Model-level, not system-level. Constitutional AI governs a single model’s behavioral tendencies. But modern AI deployments involve multi-agent systems, tool chains, API integrations, and orchestration layers. Constitutional AI has no mechanism for governing interactions between agents, authority delegation between systems, or accountability chains across an architecture. It shapes one model. It does not govern a system.

No contextual authority. Constitutional AI applies the same principles regardless of context. The same model behaves the same way whether it is handling a routine customer inquiry or processing a sensitive financial transaction. Nomotic AI introduces contextual governance: the same agent, the same data, and the same request can receive different authorization depending on context. A customer service agent might be authorized to issue a $50 refund automatically, but require human approval for a $500 refund. Constitutional AI has no mechanism for this distinction.
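The refund example can be sketched as a small authorization check. This is a hedged illustration: the function name, the `Decision` enum, and the choice to treat everything above $50 as needing human approval are assumptions, since the text only specifies the $50 and $500 cases.

```python
from enum import Enum


class Decision(Enum):
    AUTO_APPROVE = "auto_approve"
    REQUIRE_HUMAN_APPROVAL = "require_human_approval"


# Assumed policy: refunds up to this amount (in cents) need no human sign-off.
AUTO_REFUND_LIMIT_CENTS = 50_00


def authorize_refund(
    amount_cents: int, auto_limit_cents: int = AUTO_REFUND_LIMIT_CENTS
) -> Decision:
    """Decide how a refund request is handled, based only on its amount."""
    if amount_cents <= 0:
        raise ValueError("refund amount must be positive")
    if amount_cents <= auto_limit_cents:
        return Decision.AUTO_APPROVE
    return Decision.REQUIRE_HUMAN_APPROVAL


small = authorize_refund(50_00)    # the $50 case
large = authorize_refund(500_00)   # the $500 case
```

Because the limit is a parameter rather than something learned in training, it could differ by customer tier, region, or agent without any change to the model.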

No audit trail. Constitutional AI encourages models to avoid harm, but it does not create records or logs that show who authorized what. It cannot answer the question of who made a decision, because the rules are built in during training, not tracked in real time. Nomotic AI, by contrast, makes governance visible and traceable.
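As a rough sketch of what "visible and traceable" could mean, the following records each governance decision along with who authorized it. The `AuditLog` class, its field names, and the sample agent and policy identifiers are hypothetical; a production system would also need durable, tamper-evident storage, which is out of scope here.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class AuditRecord:
    timestamp: str      # UTC, ISO 8601
    agent: str          # which agent attempted the action
    action: str         # what it attempted
    decision: str       # outcome of the governance check
    authorized_by: str  # the human or policy accountable for the outcome


class AuditLog:
    """Hypothetical append-only, in-memory log of governance decisions."""

    def __init__(self) -> None:
        self._records: List[AuditRecord] = []

    def record(self, agent: str, action: str, decision: str, authorized_by: str) -> AuditRecord:
        entry = AuditRecord(
            timestamp=datetime.now(timezone.utc).isoformat(),
            agent=agent,
            action=action,
            decision=decision,
            authorized_by=authorized_by,
        )
        self._records.append(entry)
        return entry

    def who_authorized(self, action: str) -> List[str]:
        # Answers the question the text raises: who authorized what?
        return [r.authorized_by for r in self._records if r.action == action]

    def export_json(self) -> str:
        return json.dumps([asdict(r) for r in self._records])


log = AuditLog()
log.record("support-bot", "refund:order-1", "auto_approve", "policy:auto-refund-limit")
log.record("support-bot", "refund:order-2", "approved", "human:shift-manager")
approvers = log.who_authorized("refund:order-2")
```

After these two calls, `approvers` holds the single accountable party for the second refund, which is exactly the lookup a trained-in principle cannot provide.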

No trust verification. Constitutional AI assumes that if a model is well-trained, it will behave well. There is no mechanism for ongoing trust calibration, for increasing or decreasing the scope of permitted actions based on observed behavior over time. Nomotic AI treats trust as something earned through evidence, not assumed through training.
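One way to picture trust calibration is a score that moves with observed behavior and maps to a permitted scope. The sketch below is an assumption-laden illustration: the `TrustLedger` class, the 0 to 100 scale, the step sizes, and the three scope names are all invented here and are not part of any Nomotic AI specification.

```python
class TrustLedger:
    """Hypothetical trust score that widens or narrows an agent's permitted scope."""

    def __init__(self, score: int = 50, reward: int = 5, penalty: int = 20) -> None:
        self.score = score      # 0 (no trust) to 100 (full trust)
        self.reward = reward    # gained per verified good outcome
        self.penalty = penalty  # lost per observed failure; larger, so trust is slow to earn

    def observe(self, success: bool) -> None:
        delta = self.reward if success else -self.penalty
        # Clamp so the score always stays within the 0..100 range.
        self.score = max(0, min(100, self.score + delta))

    def permitted_scope(self) -> str:
        if self.score < 30:
            return "read_only"
        if self.score < 70:
            return "low_risk_actions"
        return "full_actions"


ledger = TrustLedger()
initial_scope = ledger.permitted_scope()

for _ in range(4):              # four verified successes: 50 -> 70
    ledger.observe(success=True)
earned_scope = ledger.permitted_scope()

ledger.observe(success=False)   # one failure: 70 -> 50
reduced_scope = ledger.permitted_scope()
```

The asymmetry between `reward` and `penalty` is a design choice in this sketch, reflecting the idea that trust is earned gradually through evidence and can be withdrawn quickly.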

Why Nomotic AI Needs Constitutional AI

The relationship goes both ways. Nomotic AI relies on well-trained models.

A model trained with Constitutional AI principles will exhibit fewer harmful behavioral tendencies, reducing the governance burden on the nomotic layer. A model that has internalized helpfulness, harmlessness, and honesty requires less runtime intervention than one that has not. Constitutional AI reduces the surface area of concern. Nomotic AI governs whatever remains.

To put it another way: Constitutional AI sets the floor. Nomotic AI sets the ceiling and the walls.

A well-trained model with no runtime governance will occasionally produce harmful outputs in edge cases that the constitution did not anticipate. A poorly trained model under strict runtime governance will be constantly constrained, leading to friction and inefficiency. The optimal architecture combines both: models shaped by principled training, operating within runtime governance structures that adapt to context, enforce authority, and maintain accountability.

The Vocabulary Problem

Much of the confusion between Constitutional AI and Nomotic AI comes from shared language. Both use words like principles, rules, and governance, but they mean different things in each context.

In Constitutional AI, a “principle” is a training signal, a statement like “choose the response that is more helpful and harmless,” that shapes model behavior during the reinforcement learning phase. It becomes part of the model. It is not inspectable at runtime. It cannot be modified without retraining.

In Nomotic AI, a “principle” is an architectural commitment, a design decision that governance will be built in rather than bolted on, that authority will be delegated rather than assumed, that trust will be verified rather than presumed. These principles inform the design of governance systems, not the training of models.

In Constitutional AI, governance shapes the model’s character. In Nomotic AI, governance is about structuring the system’s authority. These are related, but they work at different levels and at different times.

The Complete Picture

AI governance needs both approaches, and a clear understanding of what each one offers.

Constitutional AI addresses the question: How do we build models that are dispositionally inclined toward beneficial behavior? This is a training problem, and Constitutional AI offers an elegant solution that reduces the need for extensive human feedback while producing models that are measurably less harmful.

Nomotic AI addresses the question: How do we govern deployed systems that operate in complex, dynamic, contextual environments where training alone cannot anticipate every situation? This is an architecture and operations problem, and Nomotic AI provides the conceptual framework and vocabulary for addressing it.

Anyone building or deploying AI needs to consider both. The model must be well-trained. The system must be well-governed. These are not the same, and using one as a stand-in for the other leaves gaps that neither can fill alone.

Constitutional AI gives AI a conscience. Nomotic AI gives it a legal framework. A person with a conscience but no laws may be admirable, but is not governed. A legal system without conscience is necessary, but not enough. It is the combination that creates trust, accountability, and effective action.

Shape models with Constitutional AI. Govern systems with Nomotic AI. Do not mistake one for the other.

Chris Hood is an AI strategist and author of the #1 Amazon Best Seller Infailible and Customer Transformation, and has been recognized as one of the Top 40 Global Gurus for Customer Experience. His latest book, Unmapping Customer Journeys, will be published in 2026.