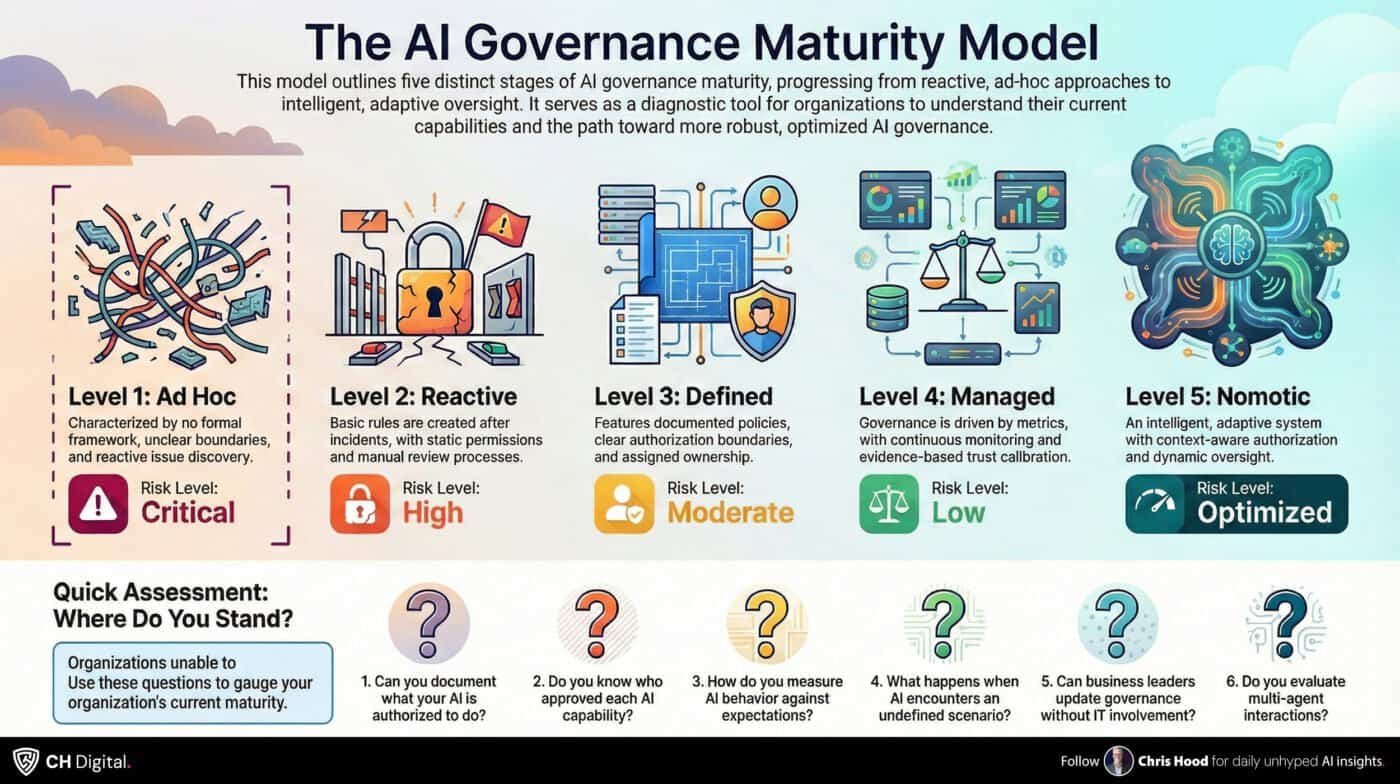

The AI Governance Maturity Model

Most organizations cannot answer a simple question: What is your AI authorized to do?

They can describe what it does and explain its design. But “authorized” implies documented limits, boundaries, and approvals, three things most AI deployments lack.

That absence points to a maturity gap that affects nearly every organization deploying AI today.

Why Maturity Matters

AI governance maturity describes how systematically an organization defines, monitors, and adapts the boundaries within which AI systems operate. Low maturity means governance is informal, reactive, and inconsistent. High maturity means governance is architectural, proactive, and intelligent.

The gap matters because risk rises as maturity falls. Organizations at low maturity levels discover problems through failures. They create rules after incidents. They operate with unclear accountability until something breaks.

Mature organizations prevent problems by design, anticipate edge cases, and adapt governance as conditions change. Failures become rare because governance is embedded in execution, not just review.

As AI systems become more capable, the cost of governance gaps increases. What was once an inconvenience becomes a liability.

The Five Levels of AI Governance

AI governance maturity progresses through five distinct levels. Each level represents a fundamentally different relationship between the organization and its AI systems.

Level 1: Ad Hoc

Characteristics: Organizations at this level have no formal governance framework. AI capabilities deploy without defined boundaries. Issues surface through failures rather than monitoring. Accountability remains unclear when problems occur.

What it looks like: A team deploys an AI assistant without documenting what it should and shouldn’t do. When the assistant takes an unexpected action, no one can say whether it was permitted. Different teams have different assumptions. There’s no single source of truth about authorization.

Risk Level: Critical

Most organizations begin here. The AI works, so governance seems unnecessary. Until it isn’t.

Level 2: Reactive

Characteristics: Basic rules exist, but they emerge from incidents rather than design. Permissions are static, assigned once and rarely revisited. Review processes are manual and periodic. Documentation is limited and often outdated.

What it looks like: After an AI system makes an inappropriate recommendation, the team creates a rule to prevent it from happening again. Months later, new edge cases emerge that the original rule never anticipated.

Risk Level: High

Reactive governance always lags behind capability. Rules address yesterday’s problems while today’s problems develop undetected.

Level 3: Defined

Characteristics: Governance policies are documented and maintained. Authorization boundaries are clear. Review cycles occur regularly. Ownership is assigned, with specific people accountable for specific aspects of governance.

What it looks like: The organization maintains a governance policy for AI systems. Each AI capability has documented boundaries. A review committee meets quarterly to assess whether policies remain appropriate. When questions arise about what an AI should do, there’s a defined process for getting answers.

Risk Level: Moderate

Defined governance provides a foundation. But static policies still lag behind dynamic systems. Quarterly reviews can’t keep up with daily changes.
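As a rough illustration of what "documented boundaries" with assigned ownership might look like in practice, here is a minimal sketch of an authorization registry. Everything in it (the `Authorization` record, the `REGISTRY` contents, the capability and action names, the dates) is hypothetical and chosen only for the example; it is not a prescribed schema.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Authorization:
    """One documented boundary: what a capability may do, and who signed off."""
    capability: str
    allowed_actions: frozenset
    approver: str
    approved_on: date
    next_review: date


# Hypothetical registry acting as the single source of truth for
# "what is this AI authorized to do?"
REGISTRY = {
    "support-assistant": Authorization(
        capability="support-assistant",
        allowed_actions=frozenset({"read_ticket", "draft_reply"}),
        approver="head-of-support",
        approved_on=date(2025, 1, 15),
        next_review=date(2025, 4, 15),
    ),
}


def is_authorized(capability: str, action: str) -> bool:
    """Anything that is not documented is treated as not authorized."""
    record = REGISTRY.get(capability)
    return record is not None and action in record.allowed_actions


def review_overdue(capability: str, today: date) -> bool:
    """True when the capability is undocumented or its review date has passed."""
    record = REGISTRY.get(capability)
    return record is None or today > record.next_review
```

The design choice worth noting is the default: an undocumented capability or action is denied, so the registry, not team assumptions, answers the authorization question.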

Level 4: Managed

Characteristics: Governance becomes metrics-driven. Continuous monitoring replaces periodic review. Trust calibration happens based on evidence rather than assumption. Cross-functional collaboration ensures governance reflects operational reality.

What it looks like: The organization tracks AI behavior against expectations. Dashboards show compliance and exception trends. Monitoring detects behavioral shifts before they become problems; trust adjusts based on observed performance.

Risk Level: Low

Managed governance responds to evidence but mainly observes and adjusts. Governance watches AI systems but doesn’t yet directly participate.
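One way to picture evidence-based trust calibration is a rolling record of whether recent actions matched expectations. The sketch below is an assumption-laden illustration: the `TrustTracker` class, the window size, and the alert threshold are all hypothetical, and a real monitoring stack would likely track far richer signals.

```python
from collections import deque


class TrustTracker:
    """Tracks how often an agent's recent actions matched expectations."""

    def __init__(self, window: int = 100, alert_below: float = 0.95):
        # Only the most recent `window` outcomes are kept; True = matched.
        self.outcomes = deque(maxlen=window)
        self.alert_below = alert_below

    def record(self, matched_expectation: bool) -> None:
        self.outcomes.append(matched_expectation)

    def trust(self) -> float:
        """Share of recent actions that matched expectations (0.0 with no evidence)."""
        if not self.outcomes:
            return 0.0
        return sum(self.outcomes) / len(self.outcomes)

    def drifting(self) -> bool:
        """True once observed behavior falls below the alert threshold."""
        return bool(self.outcomes) and self.trust() < self.alert_below
```

Note that this tracker only observes and reports. Deciding what to do about a drifting agent is still left to people, which is the Level 4 limitation described above.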

Level 5: Nomotic

Characteristics: Governance becomes intelligent and adaptive. Authorization is context-aware, evaluating circumstances rather than applying static rules. Directives generate dynamically as new scenarios emerge. Oversight spans agents, recognizing compound risks that individual permissions might miss.

What it looks like: The governance layer understands what AI agents attempt and why. When an agent requests customer data, governance evaluates whether it is part of a legitimate workflow. Does the request fit the pattern? Has this agent earned trust? Authorization decisions adapt in real time to conditions.

Risk Level: Optimized

Nomotic governance not only monitors but actively participates in AI execution, evaluating actions at every stage. Governance becomes as intelligent as the systems it oversees.
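The customer-data example can be sketched as a decision function that weighs context instead of checking a static grant. This is a simplified illustration under stated assumptions: the `Request` fields, the `LEGITIMATE_USES` table, the workflow names, and the `min_trust` threshold are all hypothetical, and a real governance layer would reason over much more than two signals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    agent: str
    resource: str
    workflow: str   # the workflow the agent says it is executing
    trust: float    # 0.0 to 1.0, e.g. derived from observed past behavior


# Hypothetical mapping of resources to the workflows that legitimately need them.
LEGITIMATE_USES = {
    "customer_data": {"refund_processing", "account_support"},
}


def authorize(req: Request, min_trust: float = 0.8) -> str:
    """Return 'allow', 'escalate', or 'deny' based on context, not a static grant."""
    expected_workflows = LEGITIMATE_USES.get(req.resource, set())
    if req.workflow not in expected_workflows:
        return "deny"       # the request does not fit a known legitimate pattern
    if req.trust < min_trust:
        return "escalate"   # the pattern fits, but trust has not been earned yet
    return "allow"
```

The same agent asking for the same resource can receive different answers depending on the workflow and its current trust, which is the difference between context-aware authorization and a fixed permission.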

Assessing Your Organization

Six questions can help gauge your current maturity:

- Can you document what your AI is authorized to do? If the answer is no, or if different people give different answers, you’re likely at Level 1. Clear documentation is the foundation of Level 3.

- Do you know who approved each AI capability? Accountability requires traceability. If you can’t trace a capability to an approval and an approver, governance is informal at best.

- How do you measure AI behavior against expectations? If measurement happens only after incidents, you’re at Level 2. Continuous monitoring indicates Level 4. Real-time evaluation during execution indicates Level 5.

- What happens when AI encounters an undefined scenario? Ad hoc responses indicate low maturity. Defined escalation paths indicate Level 3. Dynamic directive generation indicates Level 5.

- Can business leaders update governance without IT involvement? If governance changes require technical implementation, adaptation is slow. Natural-language governance interfaces that directly translate business intent into enforcement indicate high maturity.

- Do you evaluate multi-agent interactions? Individual agent permissions may be appropriate, while combined agent capabilities pose a risk. Cross-agent oversight indicates Level 4 or 5.

Organizations unable to answer most questions confidently are likely at Level 1 or 2.
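To make the diagnostic concrete, the level hints in three of the questions above can be turned into a rough estimator. This is only a sketch: the function name and answer keys are hypothetical, and taking the lowest level any answer points to is an assumption made for illustration, not a scoring method defined by the model.

```python
# Levels each answer points to, taken from the questions above.
MEASUREMENT = {"after_incidents": 2, "continuous": 4, "real_time": 5}
UNDEFINED_SCENARIO = {"ad_hoc": 1, "escalation_path": 3, "dynamic_directives": 5}


def estimate_level(documented: bool, measurement: str, undefined_scenario: str) -> int:
    """Rough maturity estimate from three of the assessment questions.

    Assumption: the weakest answer caps the overall level.
    """
    if not documented:
        # No documented authorization points to Level 1 regardless of other answers.
        return 1
    return min(MEASUREMENT[measurement], UNDEFINED_SCENARIO[undefined_scenario])
```

For example, an organization with documented authorization, continuous monitoring, and defined escalation paths would be estimated at Level 3 under this assumption, because escalation paths are the limiting answer.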

The Progression Path

Moving between levels requires different types of investment.

- Level 1 to Level 2 requires incident response. Something goes wrong, and rules get created. This progression happens naturally, but it’s costly. Each lesson comes with a failure.

- Level 2 to Level 3 requires deliberate documentation. Someone must take responsibility for creating governance policies, defining boundaries, and establishing ownership. This is organizational work, not technical work.

- Level 3 to Level 4 requires instrumentation. Monitoring systems must be built. Metrics must be defined. Dashboards must be created. This is technical work that enables evidence-based governance.

- Level 4 to Level 5 requires architectural transformation. Governance must be designed into AI systems, not wrapped around them. The governance layer must become intelligent, capable of reasoning about context and adapting to conditions. This is the most significant shift, requiring both technical capability and organizational commitment.

Each transition builds on the previous one. You cannot skip levels. An organization cannot implement intelligent, adaptive governance without first establishing the documentation, monitoring, and metrics that enable adaptation.
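The "no skipping" rule can be expressed as an ordered list of prerequisites where progress stops at the first gap. The sketch below is illustrative only: the requirement labels paraphrase the four transitions above, and the function names are hypothetical.

```python
# Investment each transition requires, in order, paraphrased from the list above.
TRANSITIONS = [
    (2, "incident-driven rules"),
    (3, "documented policies, boundaries, and ownership"),
    (4, "monitoring, metrics, and dashboards"),
    (5, "governance designed into the AI architecture"),
]


def current_level(in_place: set) -> int:
    """Highest level whose prerequisites are all in place; stops at the first gap."""
    level = 1
    for target, requirement in TRANSITIONS:
        if requirement not in in_place:
            break
        level = target
    return level


def next_investment(in_place: set):
    """The first missing requirement, or None once every transition is complete."""
    for _, requirement in TRANSITIONS:
        if requirement not in in_place:
            return requirement
    return None
```

An organization that has built dashboards but never documented its policies still evaluates to Level 2 here, because the loop stops at the missing documentation step.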

Why Level 5 Matters

The gap between Level 4 and Level 5 is not incremental. It’s categorical.

At Level 4, governance observes and adjusts. Humans review metrics, identify issues, and update policies. The cycle is faster than at lower levels but still fundamentally reactive. Governance responds to what has happened.

At Level 5, governance participates and prevents, evaluating actions in real time. Trust calibrates continuously; authorization adapts to context instantly. Governance operates at the speed of AI systems.

This matters because agentic AI operates at machine speed. Agents can make thousands of decisions per minute. They interact with other agents in complex workflows. They encounter novel scenarios that no static policy anticipated.

Governance that operates at human speed cannot adequately oversee systems that operate at machine speed. The only sustainable approach is governance that matches the intelligence and adaptability of the systems it governs.

That’s what Nomotic AI describes. In this context, ‘Nomotic’ refers to an AI governance state in which oversight itself becomes dynamic, intelligent, and law-like, creating and enforcing the right boundaries as circumstances change.

Starting Where You Are

Every organization starts somewhere. Most start at Level 1 or 2.

Accidental progression means learning through failures. Deliberate progression means investing in the documentation, monitoring, and architecture that enable each transition.

The assessment questions provide a diagnostic. Answer them honestly. Identify gaps. Prioritize investments.

And recognize that Level 5 is not optional. As AI systems become more capable, intelligent governance becomes necessary rather than aspirational. The organizations that reach Nomotic maturity will operate with confidence. The organizations that remain at lower levels will operate with increasing risk.

Where does your organization stand?

More importantly, where is it heading?

Chris Hood is an AI strategist and author of the #1 Amazon Best Seller Infailible and Customer Transformation, and has been recognized as one of the Top 40 Global Gurus for Customer Experience. His latest book, Unmapping Customer Journeys, will be published in 2026.