Runtime vs. Retrospective: Why Traditional AI Governance Fails

The incident report was thorough. Seventeen pages documenting exactly what went wrong, when it happened, who was affected, and what the team learned. Root cause analysis. Remediation steps. Updated policies to prevent recurrence.

The report was also useless for the 3,000 customers whose data had already been exposed.

This is the fundamental limitation of traditional governance. It operates in the past tense. Something happens. You detect it. You review it. You remediate it. The cycle assumes that learning from failures prevents future failures. It is a retrospective: lessons learned, as they say.

The Traditional Model

Traditional governance follows a well-established pattern.

Policy documents define what should happen. Acceptable use policies. Data handling procedures. Authorization matrices. These documents represent intent, captured in prose and stored in repositories that employees may or may not read.

Risk registers catalog what could go wrong. Likelihood assessments. Impact ratings. Mitigation strategies. These registers represent awareness and are updated quarterly or when auditors ask.

Monitoring and detection watch for anomalies. Log aggregation. Alert thresholds. Dashboard visualizations. These systems represent vigilance, scanning for signals that something has deviated from expectations.

Post-incident review analyzes what did go wrong. Timelines. Root causes. Lessons learned. These reviews represent wisdom, extracted from failures and encoded into updated policies.

The model has internal logic. Define expectations. Identify risks. Watch for deviations. Learn from failures. Each component connects to the others. Organizations have refined this approach over decades of IT operations, compliance management, and risk governance.

The model also has a fatal flaw. It operates after execution.

Why After-the-Fact Fails

The traditional governance cycle assumes that detection precedes significant damage. You catch problems early, intervene quickly, and limit impact. The gap between occurrence and detection is small enough that remediation remains possible.

AI systems break this assumption.

Speed is the first problem. An AI agent can execute thousands of actions per minute. A human governance process that detects anomalies within an hour would be considered excellent by traditional standards, yet at that rate it allows tens or hundreds of thousands of potentially problematic actions to complete. By the time detection occurs, the scale of the problem has already exceeded any reasonable remediation capacity.

Irreversibility is the second problem. Some actions cannot be undone. Data disclosed cannot be undisclosed. Commitments made cannot be unmade without consequence. Decisions communicated cannot be uncommunicated. The traditional model assumes that catching problems enables fixing them. When actions are irreversible, catching problems merely enables documenting them.

Compounding is the third problem. AI failures often cascade. One erroneous action triggers dependent actions. Those trigger further dependencies. A single flawed decision propagates through interconnected systems before any detection mechanism fires. The incident report will trace the cascade beautifully. It will not undo any of it.

Opacity is the fourth problem. Traditional detection relies on recognizable anomalies. Unusual volumes. Unexpected patterns. Known failure signatures. AI systems can fail in ways that look normal. The agent processes transactions exactly as designed. The transactions themselves are inappropriate, but nothing in the telemetry reveals it. Detection mechanisms optimized for obvious failures miss subtle ones entirely.

The traditional model asks: What happened, and how do we respond?

The necessary question is: What is happening, and should it continue?

Runtime Enforcement

Runtime governance operates on a different temporal model. Instead of reviewing completed actions, it evaluates actions before they are completed.

The distinction is architectural, not procedural. Traditional governance wraps around systems from outside. Policies constrain configuration. Monitoring observes behavior. Reviews assess outcomes. The system operates, and governance evaluates it externally.

Runtime governance integrates into execution itself. Before an action completes, the governance layer evaluates whether it should. The agent does not act and then await judgment. The agent cannot act without judgment.

This changes what governance can accomplish.

Prevention replaces remediation. Problems are blocked before they occur rather than fixed after they occur. In this model, the 3,000 customer data exposures never happen, not because the incident report was excellent, but because the governance layer blocks the exposure before it can complete.

Speed matches speed. Governance that operates at runtime can evaluate at machine speed. The gap between action and evaluation collapses to zero. There is no window during which the agent acts without oversight.

Context becomes available. Runtime governance observes what the agent is attempting, why it is attempting it, and the system’s state at the moment of action. Post-execution review reconstructs this context from logs and artifacts. Runtime governance experiences it directly.

Intervention becomes possible. When evaluation reveals a problem, the action can be halted, modified, or escalated before completion. The governance layer does not merely record that something went wrong. It prevents the wrong thing from completing.
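The pre-action check described in this section can be sketched as a small gate that sits between the agent and the side effect. This is a minimal illustration, not a reference implementation: the `Action` fields, the `evaluate` policy, and the 100-record threshold are all assumptions made up for the example. It covers halting and escalation; modifying an action in flight is left out for brevity.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Verdict(Enum):
    ALLOW = "allow"
    BLOCK = "block"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class Action:
    agent_id: str
    name: str          # hypothetical action name, e.g. "export_records"
    record_count: int  # how many customer records the action touches


class ActionBlocked(Exception):
    """Raised when the gate refuses to run an action."""


def evaluate(action: Action) -> Verdict:
    """Hypothetical policy: deletes are blocked, bulk exports need a human."""
    if action.name == "delete_records":
        return Verdict.BLOCK
    if action.name == "export_records" and action.record_count > 100:
        return Verdict.ESCALATE
    return Verdict.ALLOW


def governed(action: Action, execute: Callable[[Action], str]) -> str:
    """Run `execute` only if the policy allows it.

    The verdict is computed before `execute` is called, so a refused
    action never produces a side effect.
    """
    verdict = evaluate(action)
    if verdict is Verdict.ALLOW:
        return execute(action)
    raise ActionBlocked(f"{action.name} by {action.agent_id}: {verdict.value}")
```

The design choice that matters is that `execute` is passed in rather than called by the agent directly. The agent has no path to the side effect that skips `evaluate`.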

Memory Protection and Agent-to-Agent Authorization

Runtime governance addresses challenges that traditional models cannot even see.

Memory protection is one such challenge. Agentic AI systems maintain context across interactions. They remember what happened earlier in a conversation, what tools they have used, and what data they have accessed. This memory can be manipulated. Prompt injection attacks attempt to corrupt agent memory, inserting false context or malicious instructions.

Traditional governance has no answer to memory corruption. It cannot see the memory. It reviews inputs and outputs, not the internal state between them. An agent with corrupted memory will produce inappropriate outputs from apparently appropriate inputs. The logs will show nothing wrong. The governance will detect nothing. The damage will proceed.

Runtime governance can protect memory directly. It can verify memory integrity before each action. It can detect when context has been manipulated. It can intervene when an agent’s internal state no longer reflects legitimate operation.
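One way to verify memory integrity before each action is to seal every memory entry with a keyed hash when it is written and recheck the seals before the agent acts. The sketch below uses Python's standard `hmac` and `hashlib` modules. The `GovernedMemory` class, the entry format, and the hard-coded key are assumptions for illustration only. It detects entries altered after they were sealed. It does not judge whether an entry was malicious at the moment it was written, which would need a separate content check.

```python
import hashlib
import hmac
import json

# Assumption: the key is held by the governance layer, never by the agent.
SECRET_KEY = b"demo-key-held-by-governance-layer"


def seal(entry: dict) -> str:
    """Return an HMAC-SHA256 tag over a canonical JSON encoding of one entry."""
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()


class GovernedMemory:
    """Hypothetical agent memory whose entries are sealed on write."""

    def __init__(self) -> None:
        self._entries: list[tuple[dict, str]] = []

    def append(self, entry: dict) -> None:
        # Store the entry together with the tag computed at write time.
        self._entries.append((entry, seal(entry)))

    def verify(self) -> bool:
        """True only if every stored entry still matches its original tag."""
        return all(
            hmac.compare_digest(seal(entry), tag)
            for entry, tag in self._entries
        )
```

A governance layer would call `verify()` before each action and refuse to proceed when it returns `False`.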

Agent-to-agent authorization is another challenge. Modern AI deployments involve multiple agents that interact with each other. Agent A requests information from Agent B. Agent B triggers a workflow involving Agent C. The chain of interactions creates compound capabilities that no single agent possesses.

Traditional governance evaluates agents independently. Each agent has its permissions. Each agent has its boundaries. But the combination of individually authorized actions can produce collectively unauthorized outcomes. Agent A is allowed to query customer data. Agent B is allowed to write to external systems. Together, they can exfiltrate customer data to external systems, even though neither is individually authorized to do so.

Runtime governance can evaluate these chains as they form. It can recognize when a sequence of authorized actions produces an unauthorized outcome. It can intervene at the point where the chain becomes problematic, not after the final action completes.
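The Agent A and Agent B example can be sketched by tracking the capabilities a chain of calls has exercised so far and refusing any step that would complete a forbidden combination. The capability strings, the `Chain` class, and the single rule below are hypothetical. A real deployment would need its own vocabulary and a way to propagate a chain identifier across agents.

```python
from dataclasses import dataclass, field

# Hypothetical rule: no single chain may both read customer data
# and write to an external system.
FORBIDDEN_COMBINATIONS = [frozenset({"read:customer_data", "write:external"})]


@dataclass
class Chain:
    """Capabilities exercised so far by one chain of agent-to-agent calls."""
    chain_id: str
    used: set = field(default_factory=set)
    steps: list = field(default_factory=list)


def authorize_step(chain: Chain, agent_id: str, capability: str) -> bool:
    """Allow the step unless it would complete a forbidden combination."""
    proposed = chain.used | {capability}
    if any(combo <= proposed for combo in FORBIDDEN_COMBINATIONS):
        return False  # refused before the step runs; chain state is unchanged
    chain.used = proposed
    chain.steps.append((agent_id, capability))
    return True
```

Each agent's own permission table is untouched here. Agent B may still write externally in a chain that never read customer data. Only the combination within one chain is refused, and it is refused at the step that would complete it.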

The Non-Human Identity Problem

Traditional governance was designed for human actors.

Access control assumes a person requests access. Authentication verifies that the person is who they claim to be. Authorization determines what a person is permitted to do. Audit trails record what that person actually did. The entire model centers on human identity as the unit of accountability.

AI agents are not human. When an agent requests data, executes a transaction, or calls another service, who is acting? The agent itself? The user who initiated the workflow? The developer who built the agent? The organization that deployed it?

Traditional identity models have no good answer. They assign agents service accounts designed for automated processes, not autonomous decision-makers. They grant permissions based on technical requirements, not contextual appropriateness. They log actions without capturing the reasoning that drove them.

The result is an accountability gap. The agent takes actions. The actions have consequences. But tracing those consequences back to responsible parties requires forensic reconstruction that traditional governance cannot provide.

Runtime governance addresses non-human identity directly. It evaluates agent actions in context, not just against permission tables. It maintains accountability chains that connect agent behavior to the humans who authorized it. It distinguishes between actions the agent is technically capable of performing and those it should take given its current purpose and state.

The agent is not a user with permissions. It is an actor with authority, authority that must be evaluated at each action, not granted once and assumed thereafter.
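An accountability chain of this kind can be sketched as a delegation record that travels with the agent and is consulted on every action. All names below (`Delegation`, `AuditRecord`, `check_action`, the example purpose and actions) are assumptions for illustration. The point is only that each decision is logged together with the human who authorized the agent and the purpose it was authorized for.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Delegation:
    """Who handed the agent its authority, and for what purpose."""
    agent_id: str
    authorized_by: str           # the human accountable for this delegation
    purpose: str                 # e.g. "refund_processing"
    allowed_actions: frozenset   # actions appropriate to that purpose


@dataclass(frozen=True)
class AuditRecord:
    timestamp: str
    agent_id: str
    authorized_by: str
    purpose: str
    action: str
    allowed: bool


def check_action(delegation: Delegation, action: str, log: list) -> bool:
    """Evaluate one action against the delegation and record who is accountable.

    The record is written whether or not the action is allowed, so refused
    attempts are as traceable as completed ones.
    """
    allowed = action in delegation.allowed_actions
    log.append(AuditRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        agent_id=delegation.agent_id,
        authorized_by=delegation.authorized_by,
        purpose=delegation.purpose,
        action=action,
        allowed=allowed,
    ))
    return allowed
```

Because the check runs per action, an agent holding credentials that technically permit an export is still refused when the export falls outside the purpose it was delegated.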

Nomotic as Runtime Governance

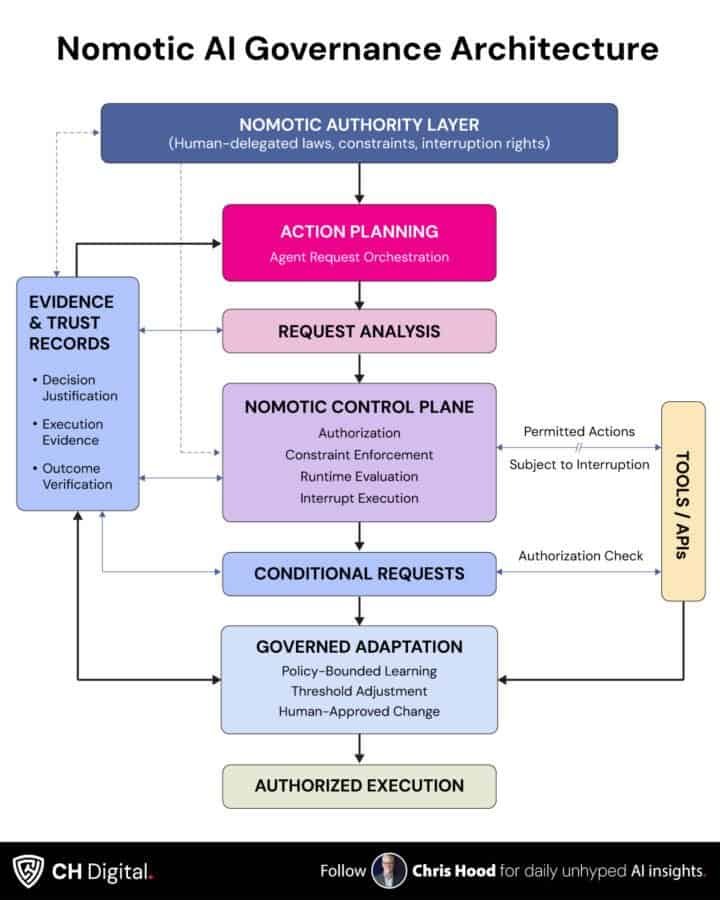

Nomotic AI is runtime governance by design.

The four functions of Nomotic governance (govern, authorize, trust, and evaluate) operate continuously throughout execution. Not before deployment. Not after incidents. During action.

Govern means rules are active, not archived. The boundaries that constrain agent behavior are enforced at runtime, consulted before each action, and updated as circumstances change.

Authorize means permission is evaluated, not assumed. Each action passes through authorization that considers the current context, not just static configuration. Permission granted yesterday does not automatically apply today.

Trust means reliability is verified, not merely hoped for. The agent’s behavior is continuously compared against expectations. Deviations trigger scrutiny. Consistency earns expanded authority. Trust is dynamic because runtime governance observes behavior as it happens.

Evaluate means impact is assessed in the present, not reconstructed from the past. Actions are evaluated against purpose, ethics, and appropriateness, while they can still be influenced.
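The idea that deviations trigger scrutiny and consistency earns expanded authority can be sketched as a score that moves with observed behavior and caps what the agent may do. This is not Nomotic's API. The `TrustTracker` class, the point values, and the thresholds are invented for illustration.

```python
class TrustTracker:
    """Hypothetical trust score from 0 to 100 that gates an agent's authority."""

    def __init__(self, score: int = 50) -> None:
        self.score = score

    def record(self, matched_expectation: bool) -> None:
        # A deviation costs more than a consistent action earns,
        # so trust is slow to build and quick to lose.
        delta = 5 if matched_expectation else -20
        self.score = min(100, max(0, self.score + delta))

    def max_transaction(self) -> int:
        """Largest transaction the agent may run unaided at its current score."""
        if self.score >= 80:
            return 10_000
        if self.score >= 50:
            return 1_000
        return 0  # below 50, every transaction needs human approval
```

An authorization step would read `max_transaction()` at the moment of each action, so a limit that applied yesterday is not assumed to apply today.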

This is the temporal shift that traditional governance cannot make. Traditional governance looks backward. What happened? What went wrong? What do we learn? These questions have value, but they cannot prevent harm.

Nomotic governance looks at the present moment. What is happening? Should it continue? Is this action appropriate, given everything we know right now? These questions enable prevention because they are asked while prevention is still possible.

The seventeen-page incident report is excellent documentation. It represents organizational learning. It will inform future policy.

It will not help the 3,000 customers whose data was already exposed.

Runtime governance might have. That is the difference that matters.

Chris Hood is an AI strategist and author of the #1 Amazon Best Seller Infailible and Customer Transformation, and has been recognized as one of the Top 40 Global Gurus for Customer Experience. His latest book, Unmapping Customer Journeys, will be published in 2026.