Contact Center AI Governance by Design, Not by Reaction

Contact centers were among the first enterprise functions to deploy agentic AI at scale. The appeal was obvious: automate routine inquiries, reduce wait times, free human agents for complex issues, and operate around the clock without fatigue.

The technology delivered. AI agents now resolve millions of customer interactions every day across industries. They process refunds, answer product questions, update account information, and route complex issues to human representatives.

Another outcome emerged alongside those capabilities: governance chaos.

The Hidden Governance Problem

Most contact center AI operates under hidden constraints. Vendor defaults. System prompts written during implementation and never revisited. Escalation rules buried in configuration files. Knowledge bases that drift out of sync with products and policies.

This is not ungoverned AI. It is accidentally governed AI, which creates a larger risk.

Accidental governance has no owner. No one reviews it. No one knows whether it still fits the current business reality. The AI continues operating under constraints that made sense months ago but no longer align with today’s policies, products, or priorities.

A common objection to formal governance frameworks argues that they add supervisory layers, increasing complexity and opacity. More steps. More oversight. More friction.

That objection gets the problem backward.

Opacity already exists. It lives in the hidden steps no one examines. Prompt templates instruct the AI to “be helpful and friendly” without defining boundaries. Permission settings accumulate without review. Escalation logic lives in the head of one engineer who never documented it.

Governance frameworks do not create opacity. They expose what already hides beneath the surface.

Governance Inside vs. Outside the System

Another objection claims that true alignment comes from AI that can question its own knowledge, intent, and authority. In this view, governance wrapped around intelligence only scales compliance, while governance embedded inside the system scales responsibility.

The distinction sounds elegant. The framing is misleading.

An AI agent that questions its own authority still operates within constraints defined by someone. Training data, system prompts, reinforcement methods, and architectural choices shape how that questioning occurs. Someone designed those mechanisms.

That is still governance. It is internalized governance. Internalized governance without ownership or review simply replaces visible rules with invisible ones.

Consider a contact center AI trained to be “helpful.” Helpful according to whom? Helpful within what boundaries? Helpful at what cost to accuracy, compliance, or organizational risk?

When helpfulness lacks boundaries, AI agents overpromise, disclose information they should not share, or approve requests beyond their authority. They do so while faithfully following the value they were trained to prioritize.

Governance does not oppose responsibility. Governance defines responsibility in operational terms.

The Drift Problem

Contact centers face a specific challenge: drift.

Products evolve. Policies change. Promotions launch and expire. Organizational priorities shift. Knowledge that was accurate at deployment becomes inaccurate over time, sometimes quickly.

Static governance cannot keep pace with drift. Rules written once and applied uniformly lose relevance as reality changes. The AI keeps operating inside outdated constraints while the business moves on.

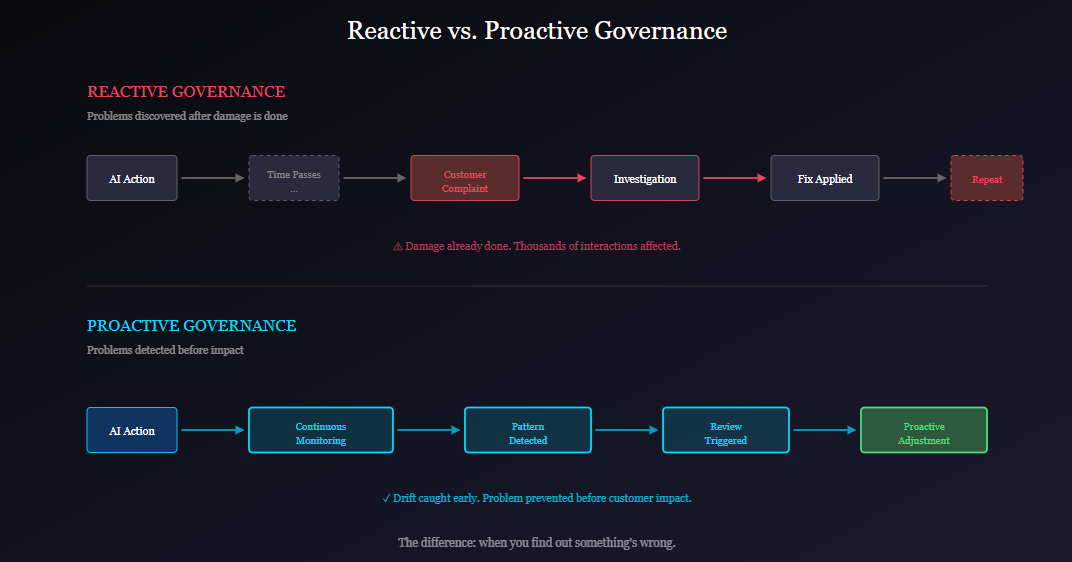

This is where the distinction between reactive and proactive governance matters.

Reactive governance looks backward. Teams review what the AI did and respond after issues surface. Audits, customer complaints, and compliance reviews catch problems late. By the time detection occurs, the AI has already handled thousands of interactions using outdated information or inappropriate boundaries.

Proactive governance, which Nomotic AI calls the Trust and Evaluate functions, operates continuously. Systems compare current behavioral patterns against established baselines, so drift surfaces as it emerges, not months later in a quarterly review.

Consider a contact center AI authorized to issue refunds up to $500. That limit defines an authorization boundary. Intelligent governance also monitors patterns inside that boundary: sudden spikes in refund requests, average refund values creeping toward the limit, or unusual refund volume tied to a specific product. These signals trigger reviews before they become systemic issues.
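The refund example can be made concrete with a minimal Python sketch. It assumes the $500 limit from the example above and separates two things: a hard authorization check that escalates anything over the limit to a human, and a set of drift signals computed by comparing a current window of refunds against an equal-length baseline window. The names (`Refund`, `authorize`, `drift_signals`) and every threshold (a 1.5× volume increase, averages above 80% of the limit, a minimum of five refunds per product) are hypothetical illustrations, not part of Nomotic AI or any vendor's product.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean

# Hypothetical values for illustration only; tune to your own policy.
REFUND_LIMIT = 500.00   # authorization boundary from the example above
VOLUME_RATIO = 1.5      # current window vs. baseline window
PROXIMITY = 0.8         # "creeping toward the limit" = average above 80% of it
MIN_PRODUCT_COUNT = 5   # ignore tiny per-product counts


@dataclass
class Refund:
    amount: float
    product: str


def authorize(amount: float, limit: float = REFUND_LIMIT) -> str:
    """Hard boundary: the AI may approve up to the limit; anything above goes to a human."""
    return "approve" if amount <= limit else "escalate_to_human"


def drift_signals(baseline: list[Refund], current: list[Refund],
                  limit: float = REFUND_LIMIT) -> list[str]:
    """Compare a current window of refunds against an equal-length baseline window."""
    signals: list[str] = []
    # Sudden spike in overall refund volume.
    if len(current) > VOLUME_RATIO * len(baseline):
        signals.append("volume_spike")
    # Average refund value creeping toward the limit.
    if current and mean(r.amount for r in current) > PROXIMITY * limit:
        signals.append("average_near_limit")
    # Unusual refund volume tied to a specific product (Counter returns 0 for unseen products).
    base_counts = Counter(r.product for r in baseline)
    for product, n in Counter(r.product for r in current).items():
        if n >= MIN_PRODUCT_COUNT and n > VOLUME_RATIO * base_counts[product]:
            signals.append(f"product_spike:{product}")
    return signals


# Usage with made-up sample data for two equal-length windows.
baseline = [Refund(100.0, "router") for _ in range(10)]
current = ([Refund(450.0, "router") for _ in range(5)]
           + [Refund(420.0, "headset") for _ in range(12)])

print(authorize(450.0))   # approve
print(authorize(650.0))   # escalate_to_human
print(drift_signals(baseline, current))
# ['volume_spike', 'average_near_limit', 'product_spike:headset']
```

Note that the boundary and the signals play different roles. The boundary decides individual requests; the signals decide nothing on their own and only flag patterns for human review.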

Drift detection does not add supervision. It replaces assumptions with visibility.

What Governance by Design Looks Like

In a contact center context, governance by design starts before deployment, not after failure.

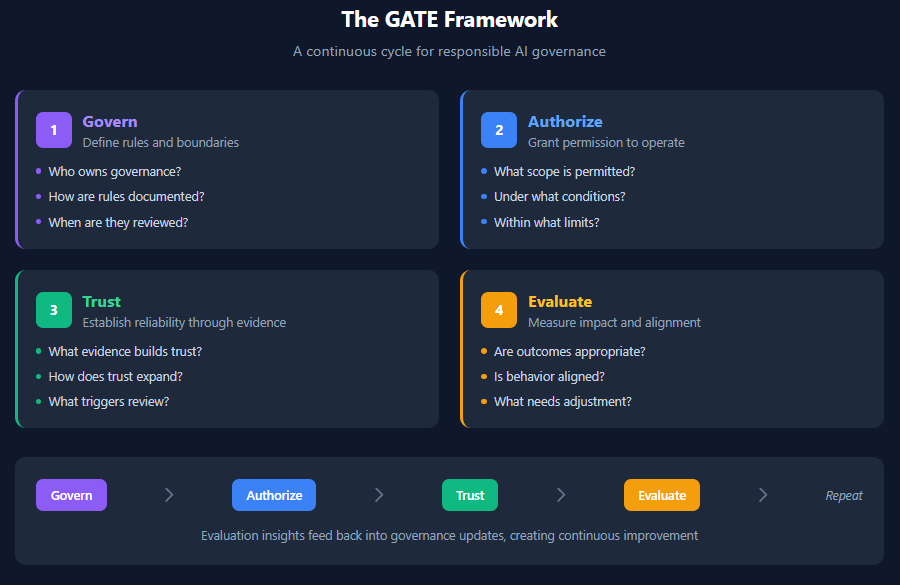

Govern: What rules define this AI’s behavior? Who owns those rules? How are they updated when products, policies, or priorities change? When a seasonal promotion ends, who ensures the AI stops mentioning it?

Authorize: What actions can the AI take? Refund limits. Data access. Commitments the system can make. When a customer requests an exception, which actions remain within AI authority and which require a human decision?

Trust: What tasks can teams rely on the AI to perform correctly? How is reliability measured over time? When accuracy degrades, and it will, how is the degradation detected? What baseline defines acceptable performance, and what deviation triggers review?

Evaluate: Do outcomes align with intent? That means not only accuracy, but also fairness, consistency, and alignment with customer treatment standards. When the AI resolves an interaction, does the outcome benefit both the customer and the organization?

Each question has an answer. The real issue is whether those answers remain explicit and owned or become implicit and orphaned.
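One way to keep those answers explicit and owned is to record each constraint with an owner, a review date, and, where relevant, an expiry. The following Python sketch assumes a hypothetical `GovernanceRule` record and a 90-day review interval chosen purely for illustration. It flags rules that are orphaned (no owner), stale (not reviewed within the interval), or expired (such as a seasonal promotion past its end date).

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

REVIEW_INTERVAL = timedelta(days=90)  # hypothetical review cadence


@dataclass
class GovernanceRule:
    rule_id: str
    description: str
    owner: Optional[str]            # None means no one is accountable
    last_reviewed: date
    expires: Optional[date] = None  # e.g., the end date of a seasonal promotion


def audit(rules: list[GovernanceRule], today: date) -> dict[str, list[str]]:
    """Group rule IDs by the governance problem each one exhibits."""
    findings: dict[str, list[str]] = {"orphaned": [], "stale": [], "expired": []}
    for rule in rules:
        if rule.owner is None:
            findings["orphaned"].append(rule.rule_id)
        if today - rule.last_reviewed > REVIEW_INTERVAL:
            findings["stale"].append(rule.rule_id)
        if rule.expires is not None and today > rule.expires:
            findings["expired"].append(rule.rule_id)
    return findings


# Usage with hypothetical rules.
rules = [
    GovernanceRule("refund-limit", "Approve refunds up to $500", "support-ops",
                   last_reviewed=date(2025, 1, 10)),
    GovernanceRule("holiday-promo", "Mention the holiday promotion", "marketing",
                   last_reviewed=date(2024, 11, 1), expires=date(2024, 12, 31)),
    GovernanceRule("tone", "Be helpful and friendly", None,
                   last_reviewed=date(2023, 6, 1)),
]

print(audit(rules, today=date(2025, 2, 1)))
# {'orphaned': ['tone'], 'stale': ['holiday-promo', 'tone'], 'expired': ['holiday-promo']}
```

Running an audit like this on a schedule turns “who ensures the AI stops mentioning it?” into a report with names attached. It does not replace the judgment of rule owners; it surfaces the rules that lack one.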

Transparency as an Operating Advantage

Nomotic AI does not aim to add bureaucratic overhead to contact center operations. It aims to make existing governance visible.

Every contact center AI already operates under constraints. The difference lies in whether those constraints are:

- Intentional or accidental

- Explicit or implicit

- Owned or orphaned

- Evolvable or static

Governance by design means teams deliberately choose constraints, document them clearly, assign ownership, and update them as conditions change.

Governance by reaction allows constraints to accumulate quietly, hide in configurations, belong to no one, and drift until failure forces attention.

Contact centers that treat governance as architectural rather than corrective operate with more clarity, not less. Teams understand what their AI can do. They can explain why it behaves as it does. They detect drift before customers experience it.

Opacity decreases when governance becomes visible.

You cannot govern what you cannot see. Nomotic AI brings governance into view.


Chris Hood is an AI strategist and author of the #1 Amazon Best Seller Infailible and Customer Transformation, and has been recognized as one of the Top 40 Global Gurus for Customer Experience. His latest book, Unmapping Customer Journeys, will be published in April 2026.