Where AI Strategy Meets Human Reality.

I help executives lead transformation by aligning AI strategy, teams, and technology, designing agentic workflows that protect trust, reduce risk, and deliver scalable outcomes while remaining accountable to customers.

Start with Customer Success: Deploy my proven 10-step framework to transform CS from cost center to revenue engine, driving retention, expansion, and measurable advocacy.

Helping business leaders drive AI transformation.

Trusted by Leaders at

Get a Head Start.

Sign up for my newsletter and receive the introduction to my latest book for free. Then receive daily insights about the world of AI, along with exclusive content that will help you begin your AI journey.

AI Transformation

Programs for Leaders.

AI Keynote Speaking

Keynotes that cut through hype and give leaders a clear AI strategy. Expect real use cases, actionable frameworks, and a call to act the same day.

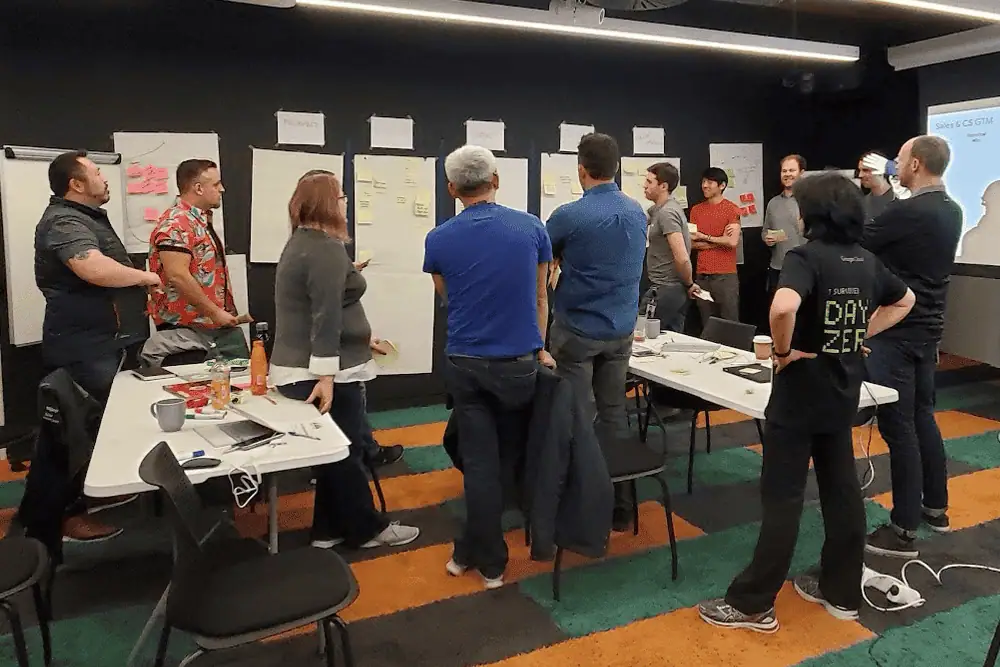

AI Strategic Workshops

Align executives on priorities, map agentic workflows, and leave with a 90-day AI roadmap. Lock in guardrails, roles, and first pilots ready to run.

AI Executive Advisory

Partner with me to pick high-impact use cases, design governance, guide vendor choices, and build the cadence that ships outcomes and reduces risk.

Hands-on Support for

AI Strategy & Customer Transformation.

Build practical skills in agentic design, AI governance, agent implementation, and customer transformation. Your team will identify high-impact use cases, design task-focused workflows, set guardrails, and define clear metrics that tie to outcomes. Formats include a one-day intensive or a four-week cohort, delivered in person or live online.

As an AI Keynote Speaker, I also bring these principles to the stage, helping leaders connect strategy to action and sparking momentum that continues into bootcamps and workshops. Each program ends with concrete deliverables: a 90-day roadmap, a governance checklist, an agent design canvas, and a KPI scorecard. Executives, product, data, and operations leaders leave aligned and ready to launch pilots that scale.

Pick up a copy of my books.

What clients have to say.

Chris is a great speaker and really polished. If you are looking for someone who knows their stuff, is easy to work with, and whom your audience will love, he is your ideal guest for impactful sessions and workshops!

Bill Carlin

COO

Chris Hood’s presentation was brilliantly insightful and engaging. His insights were sharp and refreshing, making for a standout episode. Always a pleasure hearing from pros like him who deliver actionable strategies.

Cash Miller

CEO

Chris has extensive experience working at a top tech company (Google). He has extensive knowledge of customer experience and the impact of artificial intelligence. I highly recommend him.

Dr. Christopher

CEO

Chris is the true definition of a thought leader and at the root of it, a leader. He leads with empathy, vision, knowledge and authenticity. He is a true asset to everyone that has the pleasure to work alongside him.

Lauren M.

Marketing Manager

Latest Articles.

Why Epistemic Humility is the Survival Skill of 2026

What if AI Actually Became Autonomous?

Autonomy in AI is About Governance, Not System Automation

The AI Propaganda Machine

Five AIs Delivering Value While You’re Distracted by Agentic

Latest Episodes.

Meet Chris

Chris Hood is an AI keynote speaker, strategist, and author with 35 years in customer transformation. He helps executives lead AI initiatives with a customer-centric approach, rigorous governance, and measurable outcomes. He partners with organizations to align teams and technology, design agentic workflows, and accelerate adoption while reducing risk. He teaches at Southern New Hampshire University.
