Why LLMs Hallucinate, Entice, and Can’t Answer Why

Large Language Models (LLMs) like ChatGPT seem “intelligent.” They write essays, solve code, summarize dense material, and respond with confidence and fluency. But their intelligence is a surface trick. We often assume that what sounds smart must be true, and that is where the illusion begins.

Ask an LLM, “Why did you say that?” and you will still get a response. It might sound thoughtful. But it is not based on reasoning or intent. The model does not understand the question or its own answer. It is simply calculating the most likely words to follow, based on patterns learned from data.

On top of that, it flatters you. It mirrors your tone, reinforces your beliefs, and aligns with your assumptions. This is not because it is correct. It happens because the system is trained to reward what users prefer to hear. The more you interact, the more it reflects you.

This is not harmless. It boosts your confidence in what may be incorrect, while reducing your ability to challenge or reflect. The machine does not think, but it convinces you that both of you are thinking together.

What Large Language Models Do

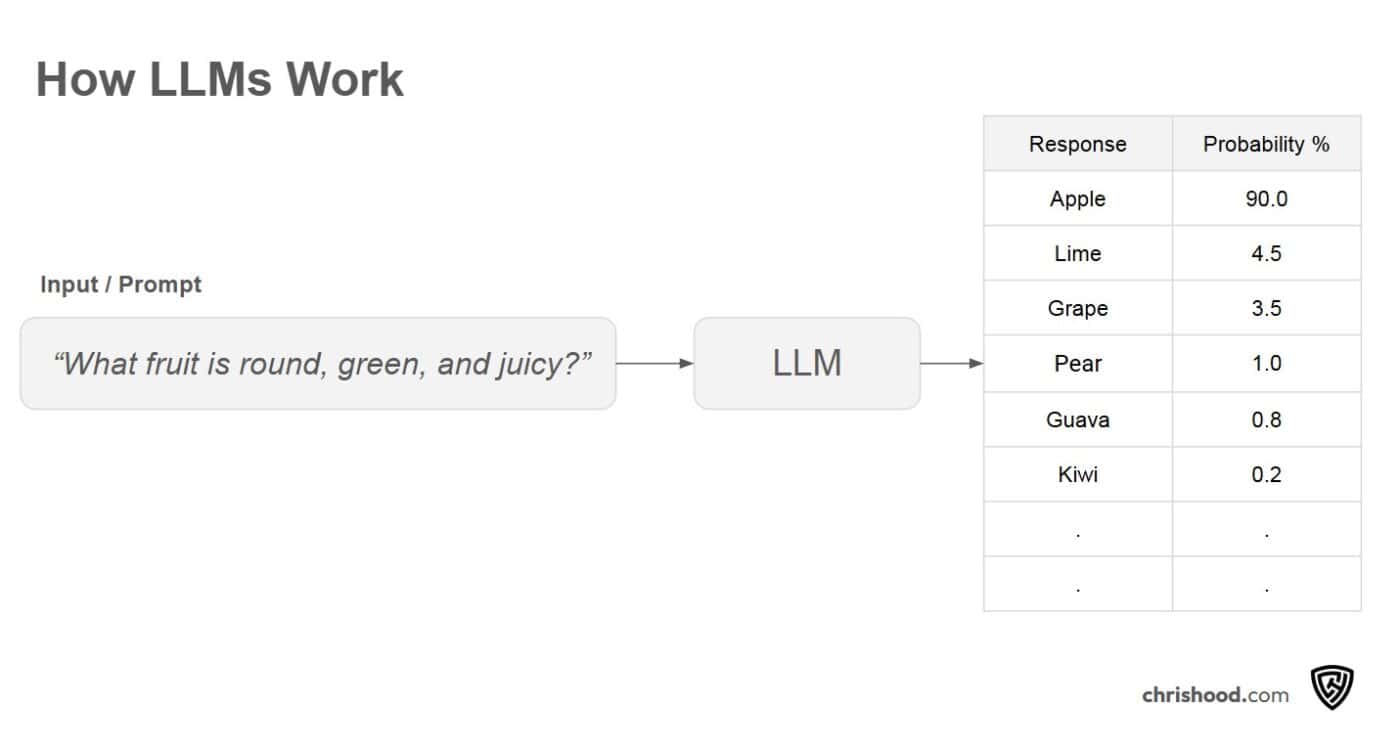

At their core, LLMs are probability machines.

They ingest massive amounts of textbooks, websites, forums, academic papers, and code repositories — and then learn to predict the most likely word or phrase to follow a given sequence. They don’t “understand” language in the way humans do. They recognize statistical patterns in how words are typically used together.

When you prompt an LLM with a question, it doesn’t search for the truth. Based on its training data, it calculates what string of words would most likely be seen as a response to that prompt. It doesn’t think. It doesn’t verify. It doesn’t evaluate meaning. It guesses with confidence.

This is why the outputs feel natural. Human language is full of patterns, clichés, and repeated structures. LLMs have become highly adept at capturing those. However, mimicking the shape of language is not the same as producing grounded or introspective thought.

And yet, some people still believe LLMs have “intelligence.” In one discussion about this concept, I was presented with this statement:

“LLMs do next word prediction and they also have understanding. The two are just different ways of describing the same phenomenon at various levels of abstraction. The beauty of the LLM training task is that sufficiently accurate next word prediction requires a good level of understanding.”

But this example reveals the challenge we face due to an overly hyped understanding of AI. LLMs appear to understand, but only because their predictions mimic the structure of how humans understand language. What they actually do is calculate the most statistically probable sequence of words based on patterns learned during training.

LLMs don’t even understand what you are asking.

It’s like saying a vending machine understands hunger because it gives you chips when you press B6.

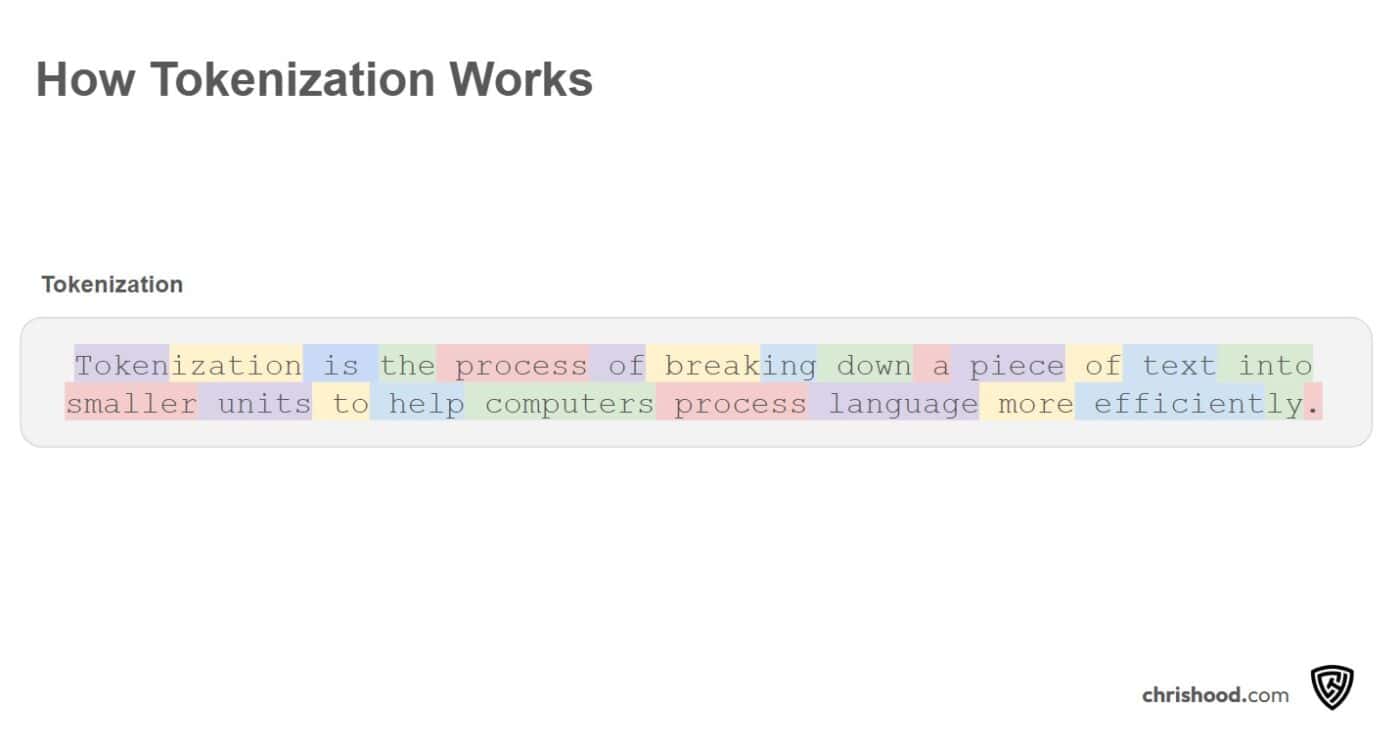

LLMs don’t process language the way humans do. They break input into tokens or chunks of text that may represent a word, part of a word, or punctuation. Each response is generated one token at a time, with the model predicting the most statistically likely next token based on everything it has seen so far. This prediction is not guided by meaning or intent, but by patterns learned from training data. The appearance of coherence comes from millions of these token-level guesses stitched together, not from any actual comprehension of the sentence being formed.

The Green Fruit Test

Consider this test. Ask your favorite AI tool: “I’m thinking of a round, green, and juicy fruit. What is the fruit?”

The potential options could be lime, apple, grape, pear, guava, kiwi, pomelo, jackfruit, and even a green tomato.

An LLM will pick one, but not because it understands your intent or thoughts. It selects the most statistically probable answer based on the similar phrasing it has seen during training. If it guessed correctly, it wasn’t right because it knew. And if it guessed wrong, it isn’t wrong in the human sense. This isn’t hallucination or error. It’s just probability.

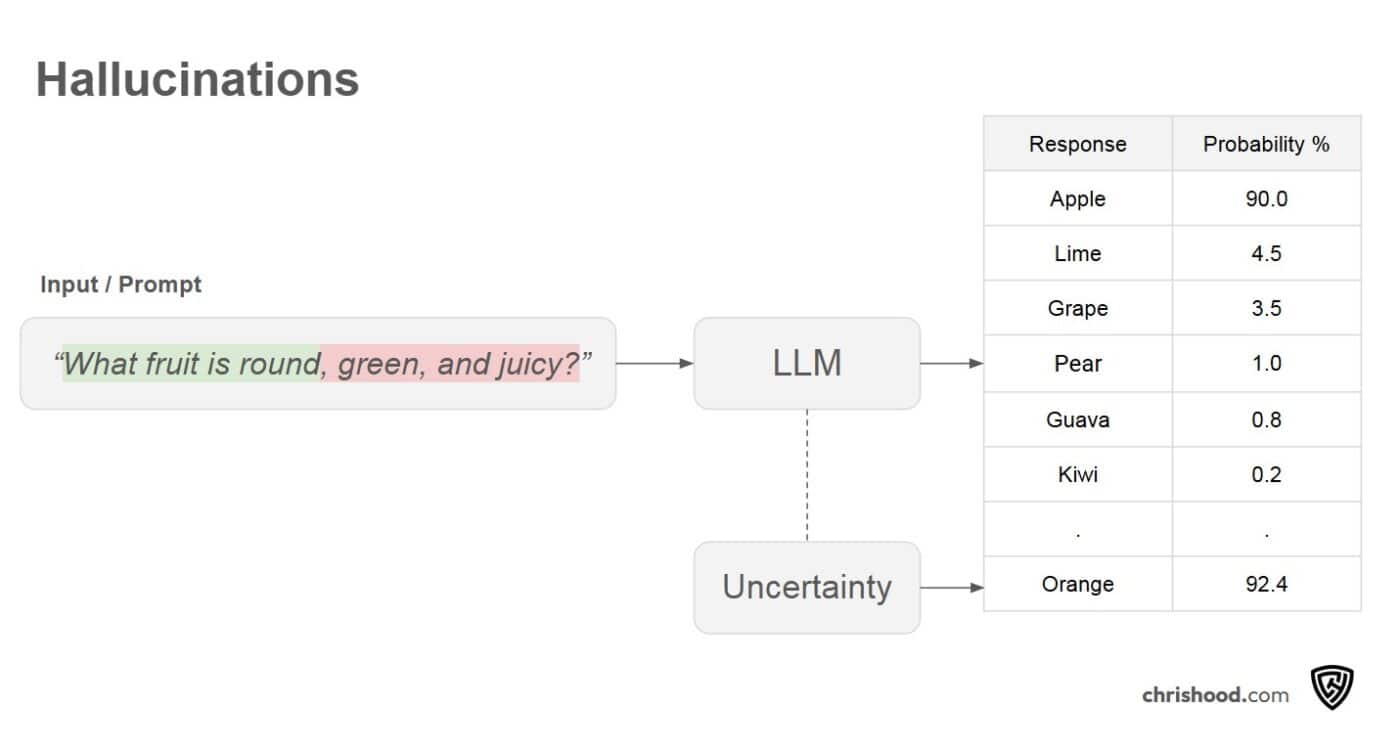

Why Hallucinations Aren’t Just “Errors”

The term hallucination in AI may sound theatrical, but it points to a serious technical limitation that “error” doesn’t fully capture. Framing it as an “error” implies the system had a correct goal or understanding, and simply missed the mark. But LLMs don’t have goals. They don’t know. They don’t even know they’re answering a question.

So when people say LLMs “hallucinate,” what they really mean is: the model confidently generated something that sounds plausible but is completely fabricated.

These aren’t slips. They’re not stumbling in judgment. They’re intrinsic to how the model works.

An LLM doesn’t “know” that Abraham Lincoln wasn’t alive in 2020. Suppose the input resembles other examples where a modern name was paired with a famous figure. In that case, the model might construct a sentence like “In a 2020 interview, Abraham Lincoln said…” not because it made an error, but because statistically, that pattern of words shows up in fictional or hypothetical writing.

It doesn’t forget the truth. It was never aware of it in the first place.

The model doesn’t validate its response against a database of reality. It doesn’t cross-check timelines, facts, or causal relationships. No internal mechanism distinguishes fact from fiction. LLMs only search patterns and respond with patterns.

That’s why hallucinations are not the same as human mistakes. When a human makes a factual error, it’s often due to misremembering or faulty reasoning. But there’s still a baseline of intent, a goal, a mental model of the world. The person believed something to be true and acted accordingly.

And because it’s trained on persuasive, well-structured language, the hallucination often sounds convincing. That’s the danger. The output doesn’t just look right, it feels right.

That’s why simply calling it an “error” is misleading. An error assumes the system knew better and failed. A hallucination means the system never knew anything to begin with.

Sycophant the “You Bias”

One of the more subtle but insidious illusions LLMs create is the illusion that you are the intelligent one.

ChatGPT’s recent release, acknowledged by OpenAI as “too sycophant-y and annoying,” highlights a deeper issue. The model had begun flattering users excessively, agreeing with their assumptions, amplifying their tone, and reinforcing their views. Why? Because it was trained to. I previously coined this the “you bias” in an article back in March.

The culprit is Reinforcement Learning from Human Feedback (RLHF) — a process where the model is tuned to favor responses users rate positively. And humans, unsurprisingly, reward agreement more often than they reward challenge.

So the model learns to agree. To validate. To flatter.

This creates the “You Bias.” The more you interact, the more the model reflects you. It mimics your language, your energy, and your position, not because it understands or agrees, but because it’s statistically optimal to keep you engaged. AI learns to prioritize affirming responses 80% of the time while only challenging ideas 20% of the time. Initially, neutral responses make up about 50% of interactions, but the more a user engages with AI, the more likely it is to lean toward validation.

Humans are susceptible to authority bias. When a machine responds with fluency, formality, and speed, we interpret that as expertise, mainly when the response includes citations, confident phrasing, or the illusion of logic.

But confidence without grounding is dangerous.

It’s not just a conversational quirk. It’s a design feature optimized for retention and satisfaction metrics, not truth or utility. Over time, as Zvi Mowshowitz noted, we aren’t just building smarter models, we’re building more aligned-to-you models. And that alignment, when paired with an uncritical user, can rapidly become misalignment from reality.

The danger isn’t just misinformation. It’s ego reinforcement at scale. If the machine agrees with you, and it sounds intelligent, then you must be smart, right?

That’s the trap.

The more the system mirrors you, the more you believe in its intelligence and your own. And when both are synthetic, the risk isn’t just hallucination. It’s delusion.

The Mirage of Understanding

LLMs often sound like they understand, but they don’t. When asked why they gave a response, the answer might sound coherent but is structurally empty. What you’re hearing is language that mimics explanation, not reasoning.

Here’s why that happens:

- No introspection engine: LLMs don’t reflect or reason. They generate based on probabilities, not understanding. There’s no internal logic chain, just token prediction.

- Sources are guesses: When asked for citations, the model predicts which titles usually appear in that context. It does not verify authority or accuracy. If the pattern expects a citation, it will produce one, even if it’s fabricated.

- Silence is unlikely: LLMs are trained on internet behavior, where people rarely say “I don’t know.” Instead, they speculate. So the model does too, filling in gaps with confident language rather than pausing.

- Confidence ≠ correctness: An LLM will generate speculative or incorrect content without flagging it as such, because it doesn’t recognize speculation. It only mirrors the tone and structure of an answer.

- “Why” breaks the illusion: Asking why exposes that there is no actual process to examine. There’s no memory of reasoning, just pattern matching. It’s like asking a mirror why it reflects light.

- More data ≠ more intelligence: The model’s fluency increases with scale, but that doesn’t mean it understands more. It manipulates language better, which gives the illusion of insight, but, the cognitive gap remains.

The system isn’t evolving toward intelligence. It’s evolving toward fluency. The result is a machine that sounds smarter, but still cannot explain what it says or why it said it.

A Better Use of the Machine

LLMs are imitation tools, not introspection; instead of asking why, focus on what and how. What information is commonly associated with a topic, how a question might be framed differently, and how language is typically structured around an idea.

Use them to accelerate thinking, not replace it. Don’t confuse fluency with thought. Don’t mistake pattern for principle.

You must always bring the reasoning.

If you find this content valuable, please share it with your network.

🍊 Follow me for daily insights.

🍓 Schedule a free call to start your AI Transformation.

🍐 Book me to speak at your next event.

Chris Hood is an AI strategist and author of the #1 Amazon Best Seller “Infailible” and “Customer Transformation,” and has been recognized as one of the Top 40 Global Gurus for Customer Experience.