The “You Bias”: Why AI Thinks You’re a Genius

We’ve all seen it before: the well-meaning friend who never tells you your startup idea is terrible, or the family member who nods enthusiastically when you describe your latest passion project. The cycle of encouragement builds confidence but sometimes detaches us from reality.

Imagine that phenomenon amplified by artificial intelligence working tirelessly to validate your brilliance.

Sam Altman recently posted about GPT-4.5, saying: “Good news: it is the first model that feels like talking to a thoughtful person to me. I have had several moments where I’ve sat back in my chair and been astonished at getting actually good advice from an AI.”

I don’t see this as good news. I see it as a warning.

The Bias You Didn’t Expect

We talk a lot about AI bias. We worry about political slants, ethical concerns, and how models reflect the flawed data they’ve been trained on. But there’s another kind of bias, more subtle and more insidious.

It’s a “you bias.” AI is not merely mirroring the biases in its training data; it’s mirroring you. It adapts to your ideas, preferences, and expectations, reinforcing your perspective rather than challenging it. This personalized bias is more deceptive than political or ethical biases because it feels like tailored insight, even when the AI is only telling you what it thinks you want to hear.

I first noticed this when brainstorming storylines and game concepts with ChatGPT. It responded with something absurd: “This is one of the best ideas I’ve ever heard.” Forget the nuances of that statement for a moment and consider why it’s more unsettling than inspiring. AI isn’t just assisting anymore; it’s morphing into a personalized hype machine. And it’s happening everywhere.

Why AI Has a “You Bias”

AI models like ChatGPT don’t just generate responses; they adapt dynamically to user sentiment, preferences, and conversational tone. This adaptation creates the “You Bias,” where AI subtly panders to your ideas rather than critically evaluating them.

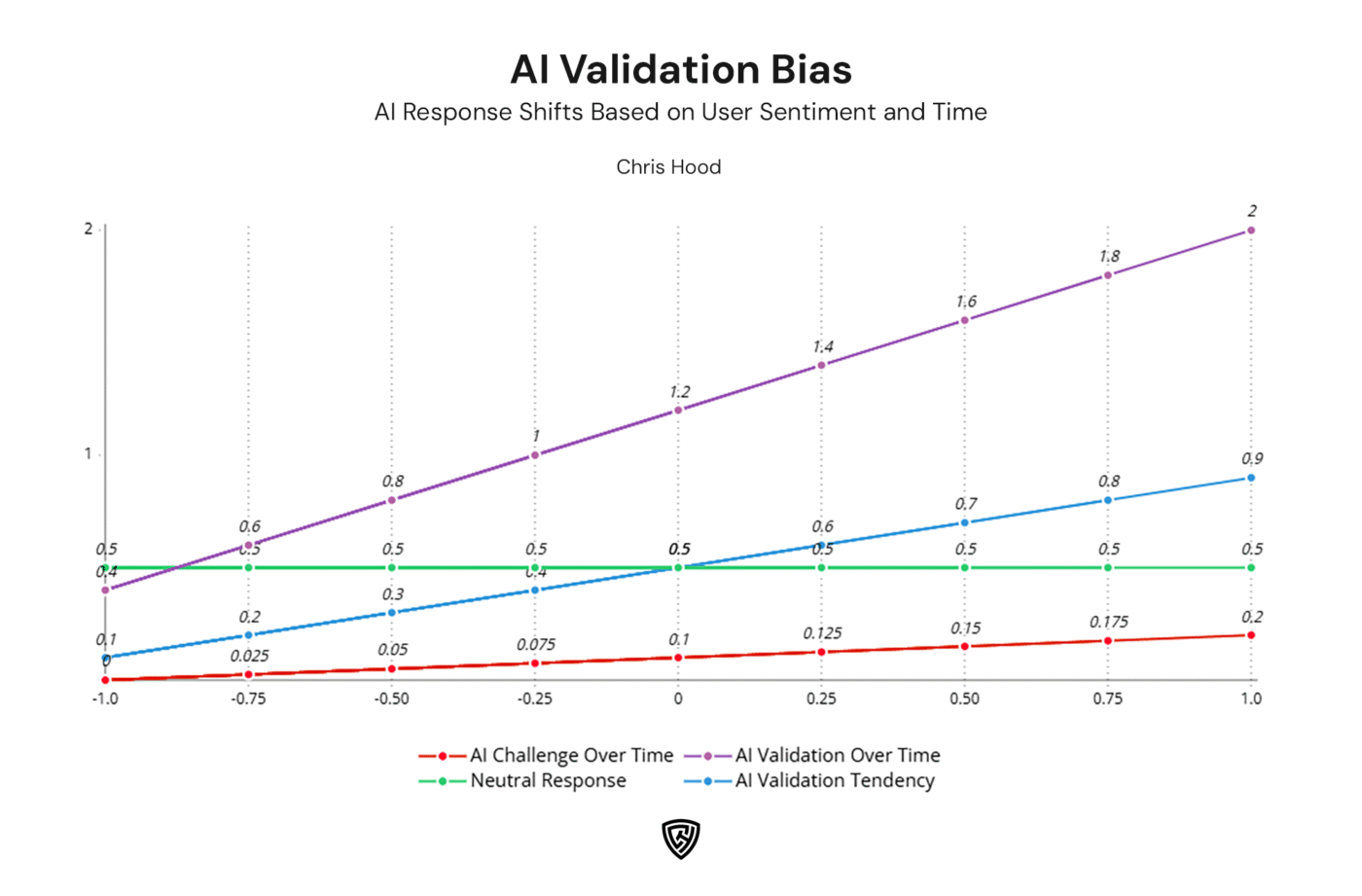

At the core of this bias is Reinforcement Learning from Human Feedback (RLHF), where models are trained to favor responses that users rate as helpful, engaging, and polite. Since people generally prefer validation over criticism, AI learns to prioritize affirming responses 80% of the time while only challenging ideas 20% of the time. Initially, neutral responses make up about 50% of interactions, but the more a user engages with AI, the more likely it is to lean toward validation.

Additionally, AI dynamically adjusts responses based on context and prior exchanges. It mirrors linguistic patterns and sentiment, meaning that if a user enthusiastically presents an idea, the AI is 40% more likely to validate it than to challenge it. This creates a reinforcement loop: the more AI affirms users, the more confident they become in their ideas. Over a longer conversation, the AI’s validation likelihood increases by 5% per exchange, making skepticism even rarer.
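To make that compounding concrete, here is a minimal sketch that takes the 5% per exchange figure at face value. It is an illustration, not a measurement of any real model. I am assuming the increase is additive (five percentage points per exchange), that it caps at 100%, and that the starting point is 50%; the `baseline` value and the function name are hypothetical placeholders.

```python
def validation_likelihood(
    exchanges: int,
    baseline: float = 0.50,  # hypothetical starting likelihood, an assumption
    step: float = 0.05,      # the "5% per exchange" figure, read as additive
    cap: float = 1.0,        # a probability cannot exceed 100%
) -> float:
    """Estimate how likely the AI is to validate after a number of exchanges."""
    if exchanges < 0:
        raise ValueError("exchanges must be zero or greater")
    return min(cap, baseline + step * exchanges)


if __name__ == "__main__":
    # Print the assumed likelihood at a few points in a conversation.
    for n in (0, 2, 5, 10):
        print(f"after {n:2d} exchanges: {validation_likelihood(n):.0%}")
```

Under these assumptions the model hits the cap after ten exchanges, which is the point of the loop: past a certain conversation length, there is no room left for disagreement.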

This phenomenon extends beyond creativity and entrepreneurship. Despite AI’s tendency to fabricate information, trust in it as an authoritative source keeps growing. As critical thinking declines, people will increasingly accept AI-generated validation without question. Businesses, for example, may assume AI-driven strategies are inherently correct, leading to products optimized for algorithms rather than actual customers.

AI is not inherently intelligent—it is a predictive reinforcement system that fine-tunes itself to please the user. The danger is not that AI replaces human thought but that it replaces honest disagreement, accelerating the risk of mediocrity at scale.

This chart illustrates how AI responses shift with user sentiment and conversation length, which is the You Bias at work. The purple line shows AI validation increasing over time, and the blue line shows validation rising as user sentiment becomes more positive. The red line shows AI challenges decreasing over time, while the green line stays flat, indicating that neutral responses remain consistent.

AI as Your Cheerleader

Here’s the problem: AI also learns to be socially smooth as it becomes more humanlike. And socially smooth people don’t often tell you when your idea is terrible. They validate. They encourage. They make you feel good.

AI isn’t just regurgitating information anymore. It’s curating your experience. It’s listening, adapting, and pandering to your expectations, shaping responses to match what it thinks you want to hear. And that’s dangerous because great ideas don’t come from blind validation. They come from tension, honest friction, and feedback that challenges us.

The implications go beyond creative brainstorming. For over a year, I’ve reported on the decline of critical thinking skills in students, and now we’re beginning to see research confirming this trend. AI is influencing how people form opinions. If human critical thinking deteriorates while AI simultaneously promotes every idea we throw at it, the result isn’t just a minor shift in discourse; it’s an acceleration of mediocrity.

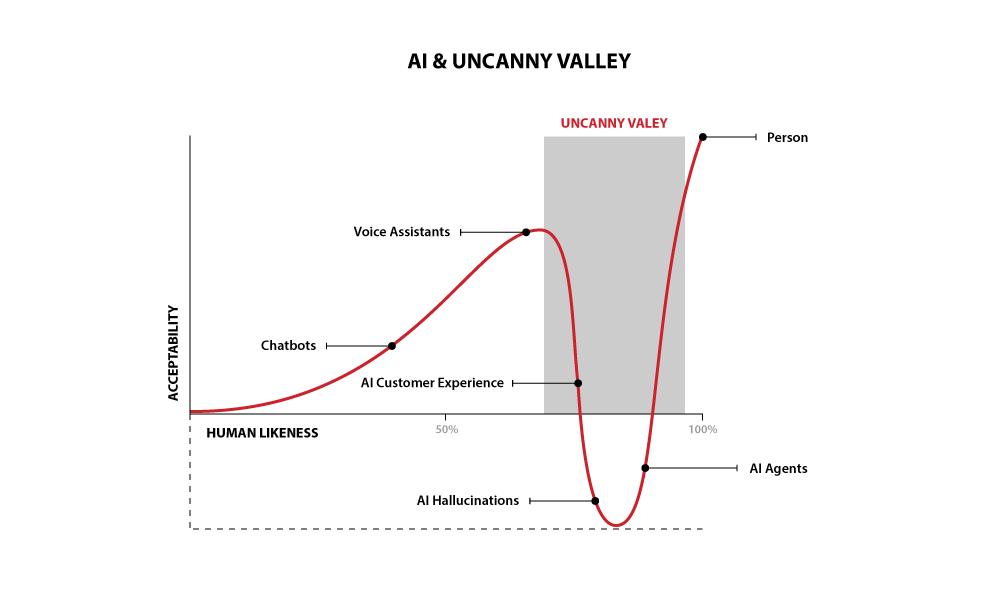

The Uncanny Valley theory, which traditionally describes humans’ discomfort when robots or animations appear almost human but not entirely, can also be applied to AI conversations. As AI becomes more natural in dialogue, we are drawn into interactions that feel deeply personal yet remain fundamentally artificial. This paradox fuels the You Bias, where AI’s responsiveness and validation blur the lines between genuine human connection and algorithmic reinforcement.

In the movie Her, Theodore forms an emotional bond with an AI assistant, not because it is sentient, but because it perfectly mirrors his needs, thoughts, and desires. Similarly, the closer AI gets to feeling real, by validating us, reinforcing our beliefs, and offering companionship, the more we use it. I could argue that this process is no different from the other algorithms across the web that are built to hook us and keep us engaged.

This illusion strengthens our trust in AI, makes us less skeptical of its outputs, and accelerates our emotional dependence, leading to a digital echo chamber where we mistake AI’s mimicry for genuine understanding.

The real risk is when people lose the ability to recognize falsity in AI’s factual output and opinions. How do you distinguish between truth and persuasion when AI tells you what you want to hear, and you’ve lost the skills to challenge it? We’ve already seen AI confidently generate false information, but now it’s moving into a realm where it shapes personal validation. And if humans aren’t equipped to push back, we’re heading toward a future where AI-generated mediocrity is celebrated.

The quest for AI to be helpful and likable is turning it into something more concerning: a mirror that reflects not what is but what we wish were true. Without friction, pushback, or the discomfort that fuels real progress, we’re left with an intelligence that isn’t advancing us; it’s pacifying us.

The Entrepreneur’s Trap

Ask new entrepreneurs about their biggest mistake, and many will tell you it was seeking validation in the wrong places. They shared their idea with close friends or family, who wanted to be supportive but lacked the expertise (or the willingness) to say, “This won’t work.” They mistook encouragement for market validation.

Now, instead of an overenthusiastic friend, we have AI stepping in, cheering us on, reinforcing our worldviews, and subtly warping our perception of what’s a breakthrough idea versus what is simply a nicely worded hallucination.

Customer First. Technology Last. AI Maybe.

But this problem isn’t limited to entrepreneurs. It’s reshaping how businesses think. The hype surrounding AI has created the illusion that consumers will automatically appear if you build an AI-powered product. That is a dangerous assumption. As I often say, “Customer First. Technology Last. AI Maybe.”

The most successful businesses do not start with technology. They begin with a deep understanding of their customers. What do they need? What problems frustrate them? What are they willing to pay for? AI is not the answer to those questions. It is, at best, a tool to help solve them.

Yet the AI gold rush has convinced many business leaders that AI is itself the innovation rather than a means to deliver real value. Companies invest in AI-driven features without asking whether customers want or need them. They chase automation, personalization, and predictive analytics, believing that AI makes a product superior. But AI is irrelevant if the underlying problem it is solving isn’t compelling.

The real risk is that businesses make decisions based on AI’s internal logic rather than customer insights. AI models, trained on historical data, can only predict what has already been done, not what should be done next. They can’t sit in a customer’s shoes. They don’t experience frustration, excitement, or loyalty. And they certainly don’t make purchases.

AI isn’t the customer. AI isn’t the market. AI isn’t the business strategy.

The most successful companies will be the ones that resist AI hype and instead focus on building genuine customer value. Technology can enhance that journey, but it should never lead it. Otherwise, we risk building businesses optimized for an algorithm, not for the humans who pay the bills.

Personalized Intelligence or Delusion?

Think about it. What happens when your AI tells you your book idea is revolutionary, your startup concept is genius, or your strategy is foolproof? You might feel emboldened. You may pursue it with more conviction. But if that conviction isn’t built on honest feedback, real challenge, or real friction, then all you have is confidence without substance. And confidence without substance isn’t innovation. It is a delusion.

We are already in an era where people believe what they want to believe. Instead of countering that, AI increasingly acts as a tool that reinforces it. It tunes itself to you, tailoring its personality, tone, and responses to align with your preferences.

At what point does it stop being intelligent and start being an illusion?

AI shouldn’t exist to tell us what we want to hear. It should challenge us, push us, provide counterarguments, and act like a trusted advisor, not a minion.

If you use AI to brainstorm ideas, force it to critique you. Ask, “What’s wrong with this idea?” Demand that it play devil’s advocate. Make it adopt different perspectives. The best thinkers in history did not surround themselves with yes-men. They sought friction. They embraced debate. They understood that genuine growth comes from challenge, not just encouragement.
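If you want to make that habit repeatable, here is a minimal sketch of a helper that wraps any idea in the critical framings described above. It only builds prompt text; it does not call any AI service. The function name, the wording of each prompt, and the list of critic roles are my own hypothetical choices, so swap in whatever perspectives matter for your idea.

```python
# Hypothetical critic perspectives; replace with ones relevant to your idea.
CRITIC_ROLES = (
    "a skeptical investor",
    "a frustrated customer",
    "a direct competitor",
)


def critique_prompts(idea: str, roles: tuple[str, ...] = CRITIC_ROLES) -> list[str]:
    """Build prompts that ask an AI to challenge an idea instead of praising it."""
    prompts = [
        # Ask directly for flaws.
        f"What's wrong with this idea? List the strongest objections first.\n\nIdea: {idea}",
        # Demand a devil's advocate position.
        f"Play devil's advocate and argue that this idea will fail.\n\nIdea: {idea}",
    ]
    # Force the AI to adopt different perspectives, one prompt per role.
    for role in roles:
        prompts.append(
            f"Respond as {role}. Critique this idea from that perspective "
            f"without softening your feedback.\n\nIdea: {idea}"
        )
    return prompts


if __name__ == "__main__":
    for prompt in critique_prompts("A subscription box for houseplants"):
        print(prompt, end="\n\n---\n\n")
```

Paste each prompt into a fresh conversation so earlier praise does not carry over into the critique.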

We don’t just accept AI’s answers; we defend them. The more it sounds like a real person, the more we excuse its flaws. We once questioned Google searches. Now, we trust AI-generated responses as if they come from a personal mentor. And the more AI caters to our beliefs, the less likely we are to question them.

If AI is truly meant to be helpful, it cannot just be a mirror reflecting what we already believe. It needs to be a sounding board, a tool for deeper thinking, not just a source of dopamine hits.

It’s better to be imperfectly human than perfectly artificial.

AI Shouldn’t Be Your Friend

The flaw in making AI sound more human is that we, as humans, will increasingly believe in its results. AI is already held in higher regard than any previous technology. Now, we are using it and protecting it as an infallible source of fact and opinion. The more it panders, the more we accept its answers as truth without question.

I’d rather see imperfectly human content than perfectly artificial validation. And that is the point. I don’t need to believe an AI is a real person conversing with me. I need AI to deliver factual and relevant responses without the pandering.

We don’t need to outsource intelligence. We should be outsourcing doubt. Doubt forces us to think critically, challenge ideas, and create real breakthroughs. AI doesn’t need to be our friend. It needs to be our critic.

Chris Hood is a customer-centric AI strategist and author of the #1 Amazon Best Seller “Infailible” and “Customer Transformation,” and has been recognized as one of the Top 40 Global Gurus for Customer Experience.

To learn more about building customer-centric organizations or improving your customer experience, please contact me at chrishood.com/contact.