Autonomy in AI is About Governance, Not System Automation

“Bounded autonomy” is a phrase that appears whenever someone senses you are about to point out that the system in question has approximately the same autonomy as a toaster with opinions. It is also a phrase that, in just two words, manages to argue with itself, lose, and then keep speaking anyway.

The core problem is that the AI industry has mistaken automation for autonomy, possibly because both involve machines doing things without asking permission, and no one stopped to check who had decided what those things were in the first place.

Automation describes what a system does. It executes tasks without manual intervention. A scheduled report is automated. A chatbot response is automated. A workflow that triggers based on conditions is automated.

Autonomy describes how decisions are governed. Who makes the rules? Who determines what actions are permissible? Who holds authority over the system’s behavior?

When vendors say “autonomous AI,” they almost always mean “automated AI.” The system performs tasks on its own. But performing tasks on its own isn’t autonomy. It’s automation operating under human-defined governance.

This confusion is not limited to vendors. In most conversations, when people describe an “autonomous” system, they are describing a system that does something on its own. It triggers actions without human intervention. It runs workflows. It makes decisions without waiting for approval. That is not autonomy. That is automation. The system is acting independently, but it is not governing independently. The rules were still written elsewhere.
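The distinction can be made concrete with a small sketch. Everything here is hypothetical and for illustration only: `PERMITTED_ACTIONS` stands in for whatever policy a human operator has written, and `run_unattended` stands in for the automated system. The function acts with no human in the loop, yet it never decides what it is allowed to do.

```python
# Hypothetical policy, written by a human operator and not by the system itself.
PERMITTED_ACTIONS = frozenset({"send_weekly_report", "reply_to_ticket"})


def run_unattended(action: str) -> str:
    """Carry out an action with no human approval step, but only if policy allows it."""
    if action not in PERMITTED_ACTIONS:
        # The system did not write this rule; it only obeys it.
        return f"blocked: {action}"
    return f"executed: {action}"


print(run_unattended("send_weekly_report"))
print(run_unattended("rewrite_own_policy"))
```

The automation lives in the function body. The governance lives in the constant above it, and the function has no way to change that constant's meaning.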

Real autonomy would mean the system governs itself. It would write its own rules, interpret them generously, and then look at your compliance checklist with the detached curiosity of a cat regarding a vacuum cleaner.

It would determine its own constraints and operate on its own judgment, not on parameters established by external authorities.

We don’t have that. We’re not close to that. “Bounded autonomy” is an attempt to claim we have autonomy while simultaneously confessing, right there in the adjective, that we absolutely do not. It is the linguistic equivalent of saying, “This bridge is structurally sound, except for the parts where it isn’t.”

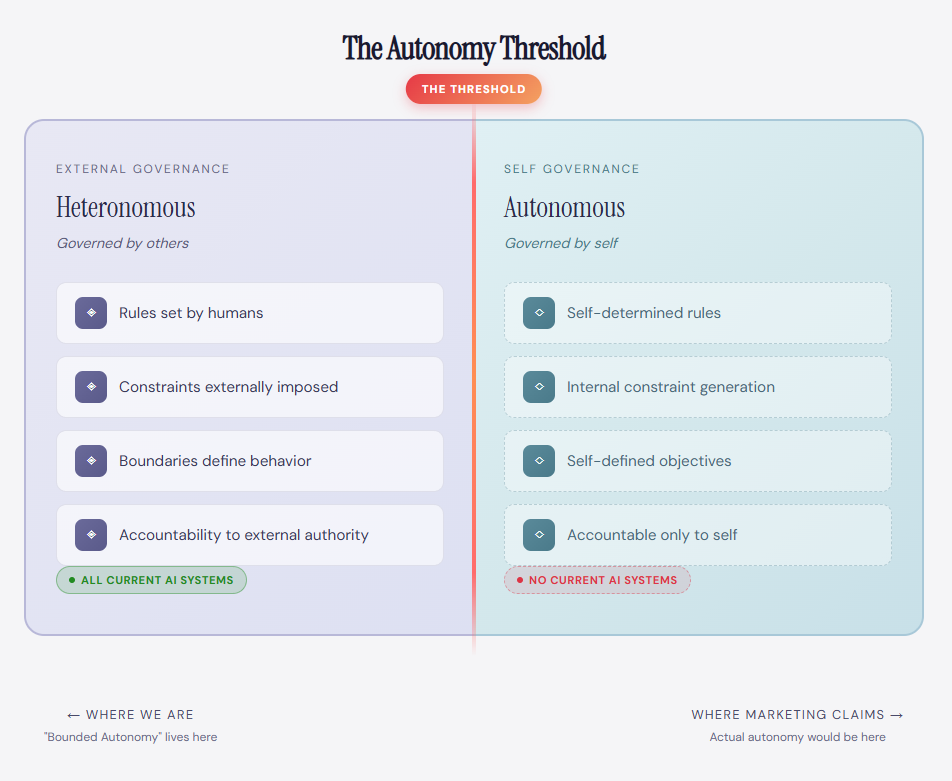

The Binary Reality: Heteronomous vs. Autonomous

I propose we stop pretending there’s a spectrum and acknowledge the binary, if only so we can all go home earlier and stop arguing about how autonomous something is while still holding its leash.

A system is either heteronomous or autonomous.

Heteronomous means governed by external rules. The term comes from the Greek roots heteros, meaning other, and nomos, meaning law. Other-governed. Subject to rules imposed from outside the system.

Autonomous means self-governed. From autos, meaning self, and nomos, meaning law. The system determines its own rules and operates according to them.

There is no middle ground. Either external authorities govern the system, or the system governs itself.

What is commonly called “bounded autonomy” is, in fact, heteronomy. The bounds are not limits placed on autonomy. They are the governance itself. The moment constraints are imposed by an external authority, the system becomes heteronomous, regardless of how complex or capable its automation may be within those constraints.

This is the premise of the Autonomy Threshold Theorem. There exists a threshold between heteronomous and autonomous systems, and current AI has not crossed it. Every system marketed as “autonomous” today remains on the heteronomous side of that threshold. The language used to describe these systems may blur this distinction, but it does not alter the underlying reality.

Here is the test. If a human can revoke the system’s authority, the system has not crossed the autonomy threshold.
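As a rough sketch, the test reduces to a single predicate. The `System` type and its field names are assumptions made for this example, not part of any real framework. Note that the test only runs one way: revocable authority shows a system is heteronomous, while the absence of a revoke switch does not by itself prove autonomy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class System:
    """Hypothetical description of a deployed system (illustrative fields only)."""
    name: str
    acts_without_approval: bool  # automation: does it run on its own?
    human_can_revoke: bool       # governance: can a human withdraw its authority?


def fails_autonomy_test(system: System) -> bool:
    """Return True when the system has not crossed the threshold.

    How much the system does unattended is deliberately ignored.
    """
    return system.human_can_revoke


agent = System("workflow-agent", acts_without_approval=True, human_can_revoke=True)
print(fails_autonomy_test(agent))
```

The `acts_without_approval` field is there to make a point: it plays no role in the result.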

What vendors label “bounded autonomy” is better described as bounded governance combined with sophisticated automation. The system is governed externally, and its behavior is constrained by rules it did not create.

In other words, bounded governance is heteronomy. Calling it autonomy does not make it so.

The terminology shifts. The condition does not.

If Not a Spectrum, Then What?

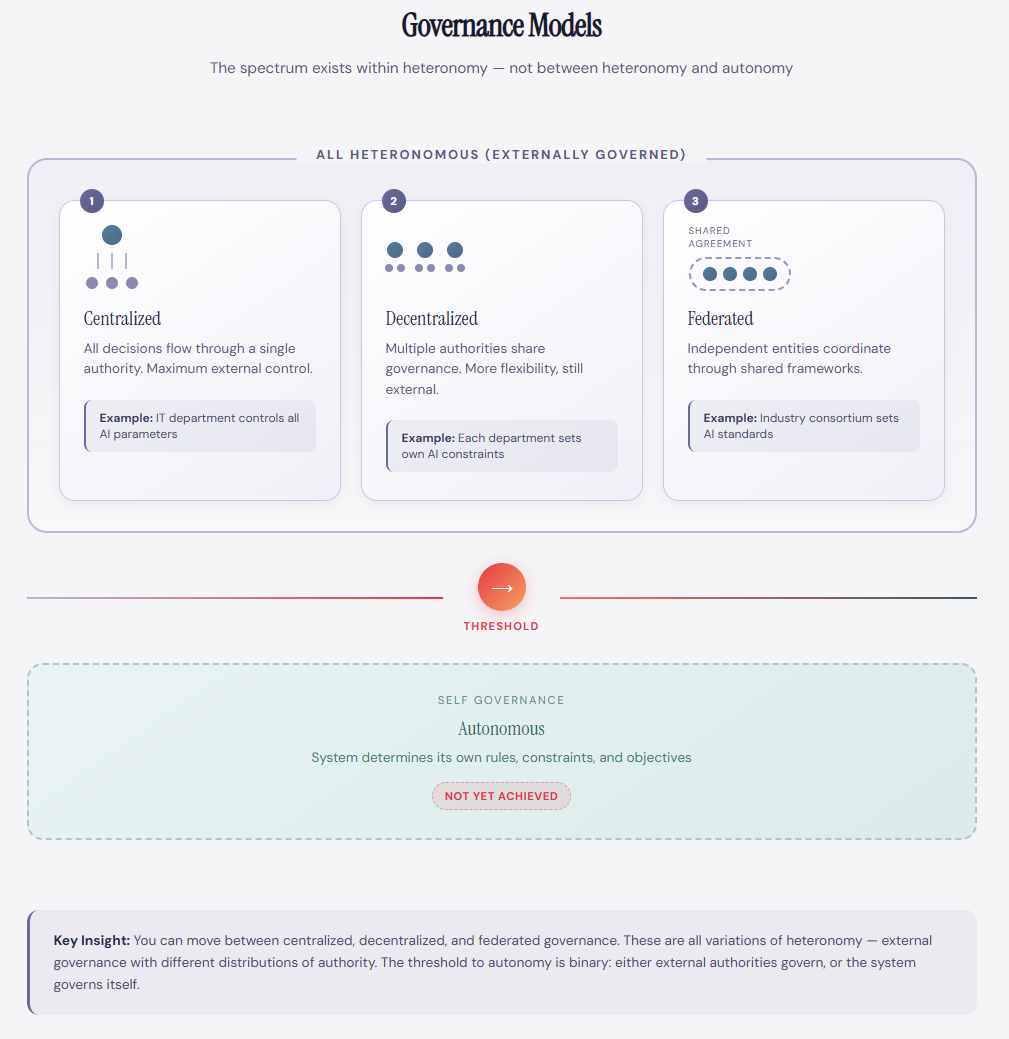

Some will object: surely there are degrees of governance? Surely some systems have more independence than others?

Yes, but that’s a spectrum of delegation, not a spectrum of autonomy.

Consider governance models we already understand:

Centralized Governance: All decisions flow through a single authority. Maximum external control. The system does exactly what the central authority permits, nothing more.

Decentralized Governance: Decision-making is distributed across multiple authorities. More flexibility, but still externally governed. The system operates within rules established by the collective authorities.

Federated Governance: Independent entities coordinate through shared agreements. Each entity exercises local authority within a broader framework. The system remains externally governed because the federation defines the rules.

Bounded Governance: External authorities define the system’s constraints, objectives, and limits, then delegate broad operational freedom within those bounds. The system may act independently inside the constraints, but it does not define them. Governance remains external.

Autonomous Governance: The system determines its own rules. No external authority imposes constraints. It is self-governing in the full and literal sense of the term.

Current AI systems operate within the first four models. None operates within the fifth.

A system described as having “bounded autonomy” may use decentralized governance, with multiple teams defining different constraints. It may use federated governance, with stakeholders agreeing on shared parameters. In every case, it remains heteronomous. External authorities continue to govern.

If a spectrum exists, it runs between governance models, not between heteronomy and autonomy. You can move from centralized to decentralized to federated to bounded governance. You cannot move gradually from heteronomy to autonomy. A system is either externally governed or it is not.
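One way to picture this is as a lookup rather than a scale. The sketch below is an illustration under assumed names (`GovernanceModel`, `rules_set_externally`, `classify_model`). It encodes the five models listed above and shows that four of them land in the same category.

```python
from enum import Enum


class GovernanceModel(Enum):
    """The five governance models described in the text."""
    CENTRALIZED = "centralized"
    DECENTRALIZED = "decentralized"
    FEDERATED = "federated"
    BOUNDED = "bounded"
    AUTONOMOUS = "autonomous"


def rules_set_externally(model: GovernanceModel) -> bool:
    """Only the autonomous model has the system writing its own rules."""
    return model is not GovernanceModel.AUTONOMOUS


def classify_model(model: GovernanceModel) -> str:
    """Map a governance model onto the binary: heteronomous or autonomous."""
    return "heteronomous" if rules_set_externally(model) else "autonomous"


for model in GovernanceModel:
    print(f"{model.value}: {classify_model(model)}")
```

Moving between the first four members changes how authority is delegated. It does not change the return value.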

The Governance Problem No One Discusses

Here’s where the conversation gets interesting—and where the “autonomous AI” marketing falls apart completely.

Consider what governance actually covers in enterprise systems:

- Data quality: Who ensures inputs meet standards? Who defines those standards?

- Policy compliance: Who verifies that the system follows regulations? Who updates constraints when regulations change?

- Asset management: Who controls what resources the system can access? Who audits that access?

- Security: Who defines threat models? Who implements controls? Who responds to breaches?

- System processes: Who determines operational parameters? Who monitors performance? Who intervenes when something fails?

Now imagine a truly autonomous system, one that governs itself. It decides what “good data” means. It interprets regulations in what it considers a reasonable way. It allocates resources based on internal priorities that make perfect sense to it and absolutely no sense to your audit committee.

Who ensures data quality? The system itself, by its own standards.

Who verifies policy compliance? The system itself interprets regulations according to its own judgment.

Who controls asset access? The system itself determines what it needs.

Who handles security? The system itself, defining and responding to threats as it sees fit.

Who monitors system processes? The system itself decides what constitutes acceptable performance.

Do you see the problem?

A genuinely autonomous AI system would need to govern itself across all these dimensions. It would set its own data quality standards, interpret its own compliance requirements, determine its own resource access, implement its own security controls, and monitor its own processes.

No enterprise would deploy such a system. No regulatory framework would permit it. No insurance policy would cover it.

The moment you impose external governance on any of these dimensions, and you will, because you must, you have a heteronomous system. The system operates under external rules. It might have sophisticated automation within those rules, but the governance remains external.
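The same point can be sketched across the five governance dimensions listed earlier. The dimension keys and the `"self"` / `"external"` labels are assumptions chosen for this example. A single externally governed dimension is enough to make the whole system heteronomous.

```python
from typing import Mapping

# The five enterprise governance dimensions from the list above.
DIMENSIONS = (
    "data_quality",
    "policy_compliance",
    "asset_management",
    "security",
    "system_processes",
)


def classify_by_dimensions(governed_by: Mapping[str, str]) -> str:
    """Classify a system from who governs each dimension ("self" or "external")."""
    missing = [d for d in DIMENSIONS if d not in governed_by]
    if missing:
        raise ValueError(f"no governor recorded for: {missing}")
    # One external governor on any dimension settles the question.
    if any(governed_by[d] != "self" for d in DIMENSIONS):
        return "heteronomous"
    return "autonomous"


mostly_self = {d: "self" for d in DIMENSIONS}
mostly_self["security"] = "external"
print(classify_by_dimensions(mostly_self))
```

In this sketch, a system that governs four of the five dimensions itself is still classified as heteronomous, which mirrors the argument in the text.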

The Autonomy Paradox

This creates a paradox that the AI industry refuses to acknowledge.

If a system became truly autonomous, the question of how to regulate it would become irrelevant in the same way that traffic laws become irrelevant to meteors.

How would it be controlled? How would it be held accountable?

The answer is: it wouldn’t be. That’s what autonomy means. Self-governance means no external governance. No external regulation. No external control.

The moment we impose regulation, control, or accountability mechanisms, autonomy disappears. The system is no longer self-governing. It becomes heteronomous, governed by external rules, even if those rules are few and lightly enforced.

So what does “bounded autonomy” mean in this context?

It means a heteronomous system with substantial delegated automation.

The bounds are the external governance. The so-called “autonomy” is marketing-speak for automation. The system remains under external control. The only difference is that the control allows a broader range of automated actions.

That’s not a new category of AI. That’s every enterprise AI system that has ever been deployed.

Clearer Language for Honest Conversations

If we want productive conversations about AI governance, and we very much need them, we need language that describes what is actually happening rather than what sounds impressive in a slide deck.

Stop saying “autonomous” when you mean “highly automated.” A system that executes complex workflows without human intervention is automated. That is already a powerful capability. It does not become more impressive by borrowing a word it has not earned.

Stop saying “bounded autonomy” when you mean heteronomous governance with delegated automation. The system is externally governed. The bounds are not a feature added to autonomy. They are the governance itself. Pretending otherwise does not change the architecture.

Use “heteronomous” deliberately. It is a precise term that accurately describes every AI system in operation today. External rules govern the system’s behavior. This is not a shortcoming. It is a prerequisite for deployable enterprise technology.

Reserve “autonomous” for its actual meaning. If we ever build systems that genuinely govern themselves, that determine their own rules, constraints, and objectives, we will need the word then. Until that day arrives, we should stop spending it on marketing copy for systems that remain very firmly under human control.

Be specific about governance models. Is your AI centrally governed? Decentrally governed? Federated? Each model has different implications for flexibility, accountability, and control. These distinctions matter more than the false spectrum of “more autonomous vs. less autonomous.”

Where This Leaves Us

Current AI systems are heteronomous. All of them. Without exception.

They operate under external governance. Rules, constraints, parameters, and boundaries are established by human authorities. The scope of their delegated actions varies. But the governance model itself does not vary. External authorities decide. The system carries out those decisions.

“Bounded autonomy” is a marketing phrase designed to blur this distinction. It borrows the prestige of autonomy while conceding, quite openly in the word “bounded,” that external governance is still doing the governing.

The honest conversation we need isn’t about which systems are more or less autonomous. It’s about which governance models are appropriate for which AI applications. Centralized governance for high-risk decisions. Decentralized governance for distributed operations. Federated governance for multi-stakeholder environments.

And maybe, eventually, genuine autonomy for systems we trust to govern themselves.

We’re not there yet. We’re not close. “Bounded autonomy” doesn’t move us forward. It merely gives confusion a badge and a budget.

Chris Hood is an AI strategist and author of the #1 Amazon Best Seller Infailible and Customer Transformation, and has been recognized as one of the Top 40 Global Gurus for Customer Experience. His latest book, Unmapping Customer Journeys, will be published in April 2026.