Today’s Gen AI is the Most Dangerous Drug on the Planet

A Note on Terminology: When I refer to AI throughout this paper, I’m speaking specifically about Generative AI, AI agents, and the increasingly mythical beliefs people hold about agentic AI systems. This paper is not a blanket condemnation of the broader AI research landscape, where genuinely beneficial work continues in fields such as cancer detection, climate modeling, drug discovery, and medical diagnostics. The critique here focuses on the consumer-facing AI tools positioned as cognitive enhancement, including chatbots, writing assistants, and automated thinking systems that promise to make us smarter while quietly making us dependent.

We’ve spent decades fighting substance abuse, understanding how addiction rewires the brain, how tolerance builds, how “just once” becomes a dependency. We’ve learned to recognize the warning signs: the rationalization, the gradual erosion of discipline, the lie that we’re different, that we can handle it.

Yet right now, we’re mainlining the most potent cognitive drug ever created, and we’re calling it productivity.

Artificial intelligence fundamentally alters how our minds work, and, like any potent drug, it comes with a pattern of use that follows a disturbingly familiar trajectory. We start with caution and control. We promise ourselves boundaries. We tell ourselves we’re using it responsibly, that we’re the exception to the rule.

We’re not.

The Dealer’s Promise

The pitch for AI sounds reasonable enough: augment our thinking, handle the mundane, democratize expertise. It’s positioned as an enhancement, not a replacement, a cognitive supplement that makes us sharper, faster, better. Every addictive substance makes identical promises: enhanced performance with minimal effort.

And like most drugs, AI delivers on these promises at first. You ask it to help with research, and suddenly, you have synthesized information that would have taken hours to compile. You use it to draft an outline, and the structure appears instantly. You lean on it to refine your writing, and your prose becomes cleaner, tighter, more polished.

The initial high is extraordinary. You feel empowered. Capable. Efficient beyond your natural limits.

But something insidious is already happening beneath the surface.

The Classroom Laboratory

I’ve watched this drug take effect in real-time. Over the past two years of teaching, I’ve observed a phenomenon so stark it’s impossible to ignore: a direct, measurable correlation between increased AI usage and declining academic performance.

A 2024 study published in the International Journal of Educational Technology in Higher Education found that increased reliance on ChatGPT was associated with higher levels of procrastination and memory loss, and a negative impact on academic performance as reflected in students’ grade point averages.

Students submit polished assignments that meet all structural requirements. Then they sit for exams on the same material and can barely construct a coherent paragraph. When questioned about their submissions, they can’t explain their arguments or defend their thesis. They’ve become skilled at prompting AI and copying output. They haven’t become educated.

A 2025 Stanford study of 18 U.S. high schools found that students who used generative AI for more than 30% of assignments scored 14–19% lower on exams requiring original analysis than peers who used it minimally or not at all (Lee et al., Stanford Graduate School of Education, 2025).

Yes, our educational systems need reform. Traditional pedagogy has flaws. But this observation, however valid, serves primarily as a rationalization, another way of avoiding the central truth: humans seek the path of least resistance, and AI has made that path devastating to cognitive development.

The easy way out has always been tempting. AI has made it irresistible. And my gradebook provides the empirical evidence of the consequences.

The Tolerance Curve

Here’s what the first week looks like: careful questions, immediate verification, cross-referencing claims, diving deeper into gaps, synthesizing AI input with your own knowledge. A complete ten-step validation process. You’re in control. You’re using it right.

By week two, you’re down to nine steps. The verification process gets shorter because, well, the AI was right last time. By week three, it’s eight steps. You trust the output just a bit more. The curiosity that drove deeper investigation starts to feel like unnecessary effort when the answer is already good enough.

This is pharmacology.

A 2025 MIT Media Lab study using EEG technology found measurable evidence of ‘cognitive debt accumulation’ when participants used LLM assistants for essay writing, demonstrating actual changes in brain activity patterns.

Your brain is experiencing what any substance abuse specialist would immediately recognize: tolerance and dependency formation. The neural pathways associated with effortful thinking are receiving less stimulation.

Meanwhile, the reward circuits activated by instant answers are firing repeatedly. Your brain is learning, at a neurological level, that the AI pathway provides reward with minimal effort.

The drug isn’t just effective. It’s too effective. And that’s precisely the problem.

The Myth of “My Way Is Different”

I hear it constantly: “But for me, how I use it…” followed by a detailed explanation of someone’s careful, thoughtful, responsible AI practice. They describe their rigorous verification process, their critical thinking framework, and their disciplined approach to maintaining cognitive sovereignty.

I believe they’re sincere. I think they currently practice what they preach.

I also believe they’re already losing the battle, and they don’t realize it yet.

The fundamental flaw in the “I use it responsibly” argument is that it ignores the inevitability of degradation. The nature of AI as a cognitive drug makes responsible use unsustainable over time. The tool itself contains the mechanism of its own abuse.

Consider what “responsible use” requires: You must maintain constant vigilance against the tool’s core value proposition. AI’s power lies in reducing cognitive load, yet responsible use demands you ignore this benefit and maintain maximum cognitive load anyway. You must resist the exact temptation the tool is designed to exploit, the human desire to minimize effort while maximizing output.

It’s like the driver who insists they’re safe because they always drive the speed limit. Yet, accidents still happen, even to careful drivers, because risk is inherent in the activity itself. Or the smoker who knows all the statistics about lung cancer but continues lighting up, convinced that somehow the rules of probability won’t apply to them. They’re not wrong about their current behavior. They’re wrong about their ability to maintain it indefinitely in the face of compounding temptation and diminishing returns.

We’ve created a codependency with AI. Ask yourself honestly: If AI disappeared tomorrow, how would you feel?

- Relieved? Probably not.

- Anxious? More likely.

- Panicked? If you’re being sincere, possibly.

That emotional response tells you everything you need to know about whether you’re using a tool or serving an addiction. Tools are useful when available, but not essential to function. Dependencies create withdrawal, anxiety, and the fear that we can no longer perform basic tasks without them.

Big Tobacco 2.0: The Industry Selling Our Cognitive Decline

As I explore in my book Infailible, the ideological belief that AI is inherently beneficial ignores the mounting evidence of cognitive harm and dependency. For decades, tobacco companies promoted cigarettes as sophisticated and healthy while funding research to muddy health risks. They knew the product was deadly. They sold it anyway, insisting that responsible use was acceptable. We’re watching the same script with AI, except the stakes are higher and the deception more insidious.

Tech companies are pouring resources into positioning AI as an unqualified good, a democratizing force, an educational revolution, the key to human flourishing. They’re embedding it into every platform, every workflow, every aspect of daily life. They’re making it unavoidable, then telling us that resistance is futile, that we’ll be left behind if we don’t embrace it fully.

Meanwhile, the evidence of cognitive harm accumulates. Students can’t retain information. Workers can’t solve problems without digital assistance. People lose the ability to write coherent thoughts without algorithmic intervention, and critical thinking skills atrophy. Attention spans collapse. Independent reasoning becomes a specialty skill rather than a basic human capacity.

What We’re Actually Losing

What is being destroyed is the cognitive infrastructure that enables independent thought.

When you habitually turn to AI for brainstorming or argument structure, you atrophy your capacity to generate novel connections and recognize weak logic. The neural networks that fire during cognitive struggle need activation to maintain strength. Skip the battle, and the networks weaken.

When you default to AI for research synthesis, you forfeit the discovery process where accurate understanding emerges. Learning is about the cognitive work of integration, the moment when scattered facts suddenly cohere into comprehension. AI provides the end product but eliminates the process that creates actual knowledge.

Most critically, when you use AI to refine your expression, you surrender the hard-won skill of translating thought into language. The loss runs far deeper than prettier sentences. The act of articulation is itself a form of thinking. We don’t fully understand our own ideas until we force them through the narrow channel of words. AI short-circuits this process, leaving us with polished output but impoverished understanding.

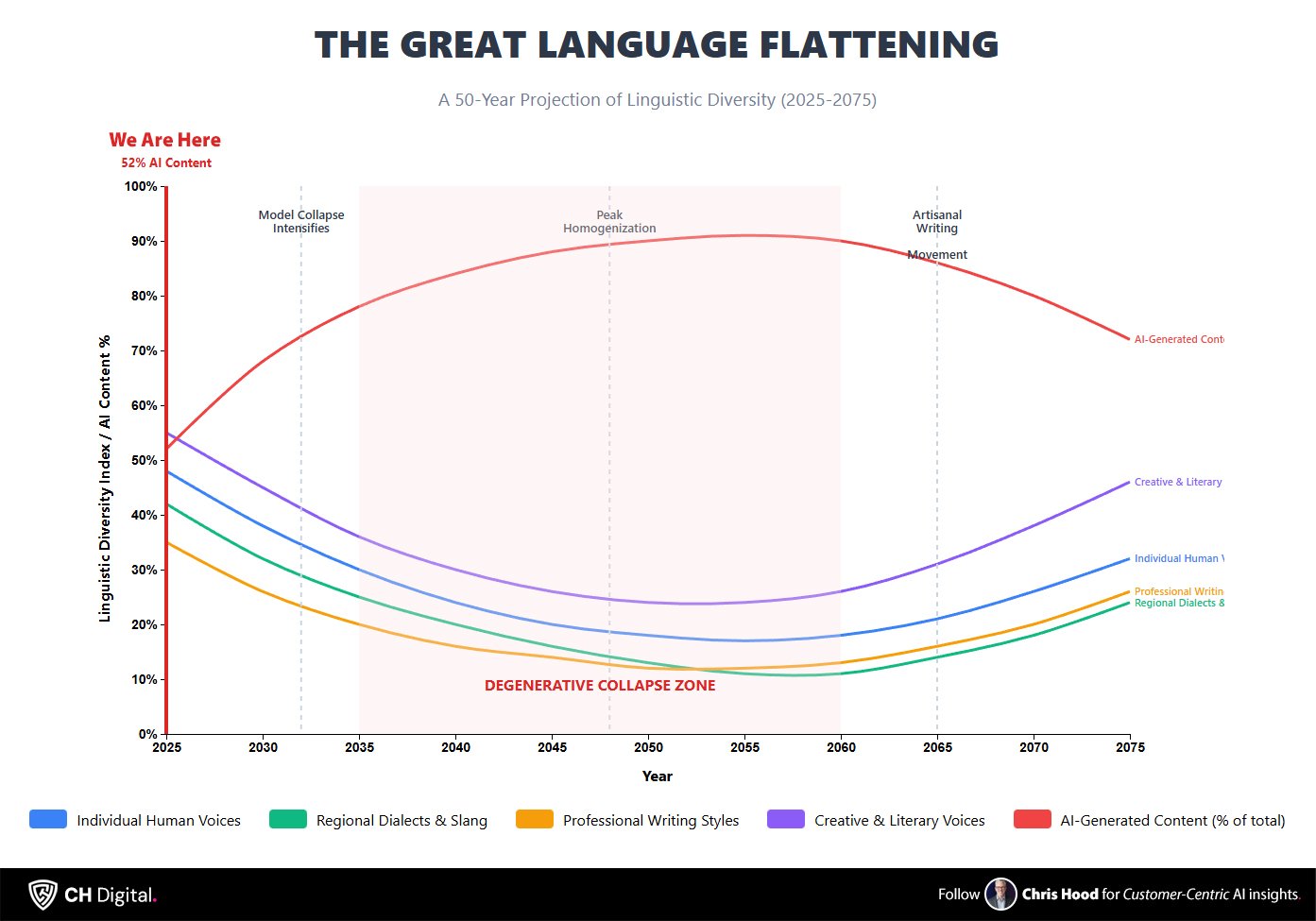

Beyond individual cognitive decay, we’re witnessing something even more insidious: the systematic flattening of human expression itself. Over 52% of online content is now AI-generated, and when AI trains on AI-generated content, it leads to model collapse. Each generation of AI trained on increasingly AI-polluted data produces outputs that converge toward the statistical mean, the probabilistic average, the linguistic equivalent of beige. We’re losing regional dialects, cultural expressions encoded in language, the unexpected connections that spark innovation, and the idiosyncratic voices that define authenticity.

Every “Here’s what you need to know” opener, every “Let’s dive in” transition, every em-dash pattern you now see everywhere: these are symptoms of a broader compression of human expression into algorithmically safe, statistically probable patterns. We’re not just outsourcing our thinking. We’re homogenizing our capacity to express anything that deviates from what the algorithm expects.

There Is No Safe Dose

We arrive at the most brutal truth: There isn’t really a better way to use it. Not in contexts where the goal is the development and maintenance of human cognitive capability.

The fundamental nature of AI as a cognitive tool creates an impossible dilemma. Used minimally, it provides little benefit, making its use questionable. Used extensively, it causes measurable harm to independent thinking capacity. The middle ground is thermodynamically unstable. It cannot hold.

The drug metaphor extends further: Just as there’s no responsible way to use methamphetamine to enhance productivity despite its technical effectiveness, there’s no sustainable way to use AI to improve cognition without accepting cognitive degradation as the price.

AI does have legitimate uses, of course. Industrial automation, data processing, and pattern recognition in massive datasets are contexts where AI augmentation makes sense, because the alternatives are human cognitive impossibility, stagnation, or worse: collapse. We can’t manually process petabytes of data. We can’t personally monitor millions of financial transactions for fraud patterns.

Strategic AI implementation in enterprise contexts, with clear boundaries, governance, and specific use cases, differs fundamentally from the unconstrained cognitive offloading we’re witnessing in education and personal productivity. But when the alternative is human thinking, AI becomes the enemy of the goal it claims to support.

The Choice We’re Not Making

The threshold has already been crossed. We’re choosing convenience over capability and efficiency over independence. Protecting our minds demands accepting limits, embracing struggle, and resisting effortless output when it erodes our ability to think. AI must be treated as something we control with restraint, not something we consume without question.

The long-term cost of losing our capacity for cognitive work far outweighs the short-term discomfort of doing that work ourselves. Our growing dependence thrives behind rationalizations and encouragement from every institution around us. The real danger lies in losing the ability to recognize what we’ve surrendered before we can admit it.

Chris Hood is an AI strategist and author of the #1 Amazon Best Seller “Infailible” and “Customer Transformation,” and has been recognized as one of the Top 40 Global Gurus for Customer Experience.