The Autonomy Myth: Every AI System Is Just Good Automation

We need to talk about a word that marketing departments and venture capitalists have hijacked, and that social media now repeats endlessly, often from people unaware of what it actually means: autonomous. From “autonomous vehicles” to “autonomous agents” to “autonomous AI systems,” the term has become so ubiquitous in technology discourse that we’ve stopped questioning what it actually means.

There are no autonomous AI systems today. Not one.

What we have instead is increasingly sophisticated automation masquerading as autonomy, and the distinction has profound implications for how we build, deploy, and regulate these technologies.

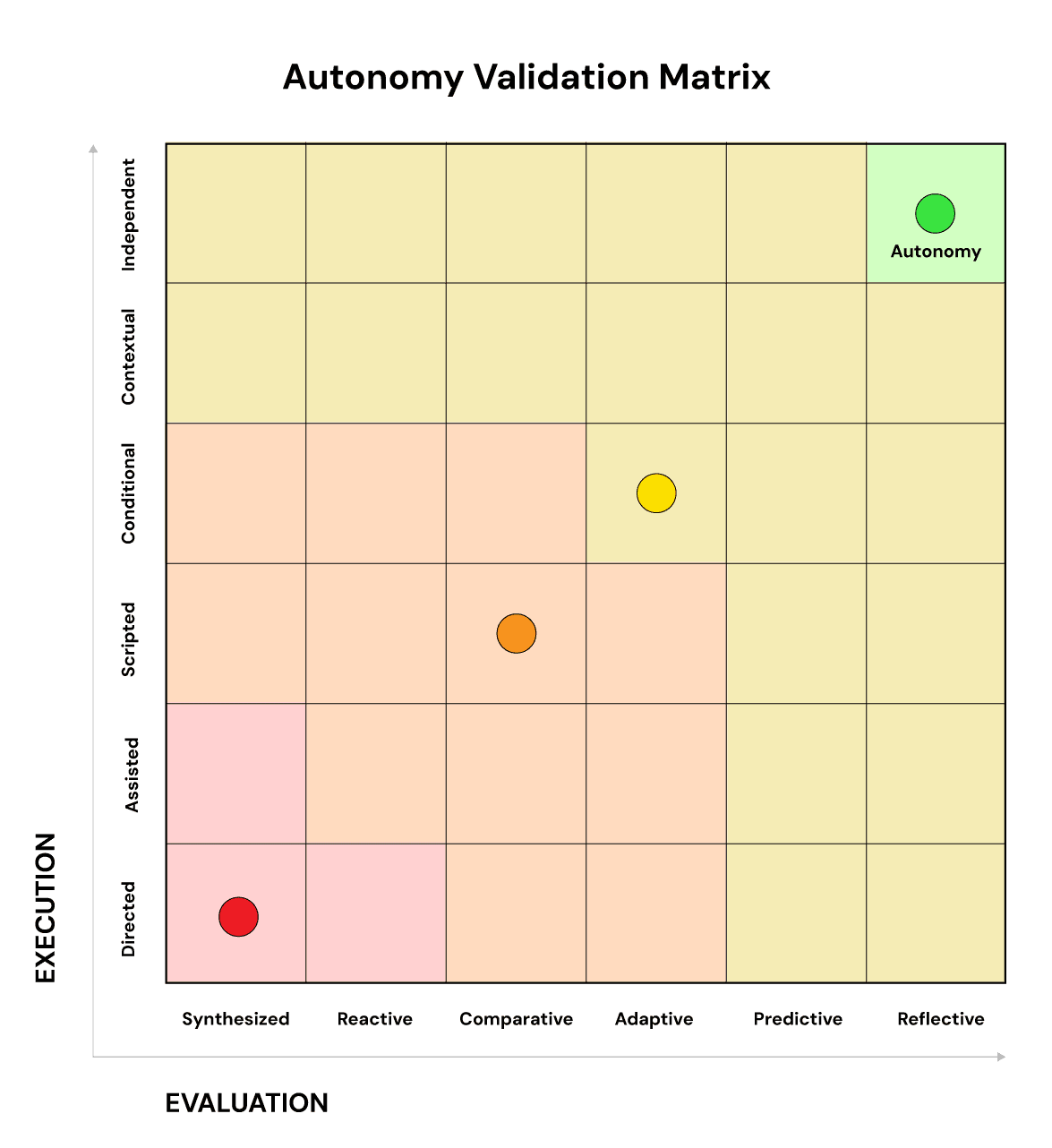

The Execution-Evaluation Framework

To understand why current AI systems aren’t autonomous, we need to examine two fundamental components of any decision-making system: execution and evaluation.

- Execution is what a system does: the actions it takes, the outputs it generates, the decisions it implements.

- Evaluation is how a system determines whether those executions were successful, appropriate, or aligned with intended outcomes.

In a truly autonomous system, both execution and evaluation would be self-determined. The system would not only act independently but would also assess the quality, appropriateness, and consequences of its actions using its own evolving criteria.

At this point, I usually encounter the rhetorical escape hatch: “Well, I agree it’s not human-level autonomy,” or “Of course no system can do that, but we’re not talking about that type of autonomy.” But here’s the problem: there is no other type of autonomy. It doesn’t come in flavors or degrees. When you have to qualify the word with “limited,” “narrow,” “bounded,” or “non-human,” you’re no longer describing autonomy. You’re describing automation with extra steps. The very need to add these qualifiers proves the point.

Current AI systems excel at execution within predefined parameters. A large language model can generate remarkably sophisticated text. An image recognition system can classify objects with impressive accuracy. A recommendation engine can predict user preferences with uncanny precision. But here’s what none of them can do: independently evaluate whether their execution was actually good in any meaningful sense beyond the narrow metrics we’ve programmed them to optimize.

Consider ChatGPT or any other large language model. It executes remarkably well, generating human-like text across countless domains. But its evaluation function is entirely extrinsic. It was trained on human feedback, optimized against human-defined loss functions, and operates within guardrails established by human designers. It has no capacity to step back and ask, “Was that response actually helpful? Did I consider the long-term implications? Should I have approached this differently?” It optimizes for the evaluation criteria we built into it, nothing more.
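To make the execution-evaluation split concrete, here is a minimal Python sketch. It is a hypothetical illustration, not a model of any real system: the names `execute`, `prefer_short`, and `prefer_polite` are invented for this example. Execution is a function that picks the highest-scoring output; evaluation is a scoring function handed to it by a human designer.

```python
from typing import Callable, Sequence

# Hypothetical illustration: the evaluator is written by a human designer,
# not by the system itself.
Evaluator = Callable[[str], float]


def execute(candidates: Sequence[str], evaluate: Evaluator) -> str:
    """Execution: return the candidate output that scores highest.

    The system never questions or rewrites `evaluate`; it only
    optimizes against whatever criteria it was handed.
    """
    return max(candidates, key=evaluate)


# Two designer-defined criteria (both hypothetical).
def prefer_short(text: str) -> float:
    # Shorter text gets a higher (less negative) score.
    return -len(text)


def prefer_polite(text: str) -> float:
    # Score by how many times "please" appears.
    return float(text.lower().count("please"))


responses = [
    "Send the report.",
    "Please send the report when you can.",
    "Send it.",
]

print(execute(responses, prefer_short))   # Send it.
print(execute(responses, prefer_polite))  # Please send the report when you can.
```

Swapping the evaluator changes the behavior, but nothing inside `execute` ever asks whether the criteria themselves are right. That is the sense in which, under the assumptions of this sketch, the evaluation function is entirely extrinsic.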

The Myth of Autonomy Levels

To be clear, in today’s AI systems:

- There is no semi-autonomous.

- There are no levels of autonomy.

- There is no limited autonomy.

- There is no bounded autonomy.

The industry loves to talk about “levels of autonomy,” particularly in the context of vehicles, where the Society of Automotive Engineers (SAE) defined six levels, from 0 (no automation) to 5 (full automation). But here’s the problem: these aren’t actually levels of autonomy. They’re levels of automation sophistication, which is why SAE’s own standard, J3016, calls them levels of driving automation.

A so-called “Level 4 autonomous vehicle” isn’t 80% autonomous. It’s 0% autonomous and 100% automated within a defined operational domain. The car doesn’t decide where to go, whether the trip is necessary, or whether driving conditions warrant postponing travel. It executes a predefined set of instructions within predetermined constraints, using sensors and algorithms to navigate from Point A to Point B. When it encounters a situation outside its programmed parameters, such as an unusual road closure, an ambiguous traffic scenario, or a moral dilemma, it doesn’t exercise autonomous judgment. It either follows its predetermined protocol or hands control back to a human.

This isn’t a criticism of the technology, which is genuinely impressive. It’s a criticism of the language we use to describe it. By calling these systems “autonomous,” we create false expectations about their capabilities and obscure their actual limitations. We suggest they have agency they don’t possess and judgment they can’t exercise.

The same pattern repeats across AI applications. An “autonomous trading system” doesn’t independently decide whether to participate in a market or question the ethics of high-frequency trading. An “autonomous drone” doesn’t choose its own missions or evaluate whether those missions align with broader strategic goals. Your car is not suddenly going to decide it’s hungry and change course to the nearest gas station.

There are no levels of autonomy in these systems because the systems haven’t reached autonomy yet. You cannot have gradations of something you haven’t achieved. It’s like claiming “levels of flight” for a machine that hasn’t left the ground. Theoretical levels of autonomy could only exist once a system crosses the threshold into genuine autonomy. Until that happens, what we’re measuring is the level of automation sophistication, not degrees of autonomy. Better sensors, faster processors, and more training data don’t move you up an autonomy scale. They make you better automated. Autonomy isn’t a spectrum you gradually ascend. It’s a categorically different type of capability that current systems simply don’t possess, and no amount of incremental improvement in automation gets you there.

The Marketing Capture of Autonomy

So why has “autonomous” become the descriptor of choice for advanced AI systems? Because it sells.

Autonomy implies agency, intelligence, and capability in ways that “automated” doesn’t. An “autonomous agent” sounds sophisticated, cutting-edge, even slightly magical. An “automated agent” sounds like what it actually is: a programmed system following instructions. The former attracts investment, generates press coverage, and commands premium pricing. The latter sounds like enterprise software from 2010.

This marketing capture has real consequences. It shapes how venture capitalists evaluate companies, how executives justify technology investments, how regulators approach policy, and how the public understands AI capabilities. When a company claims to offer “autonomous AI agents,” they’re making an implicit promise about capabilities that exceed mere automation, and in almost every case, that promise is false.

Case in point: the example above from Boston Consulting Group (BCG), supposedly the gold standard, uses both terms to hype its reports.

The industry has recognized this problem in some domains. Notice that automotive manufacturers have largely moved away from “autonomous vehicles” in favor of “self-driving cars” or “advanced driver assistance systems.” This isn’t just rebranding. It reflects legal and practical pressures that demand precision in how we describe technology.

When you call a car “autonomous,” you imply it bears responsibility for its actions. When accidents happen, that language becomes a liability. The shift in terminology reflects a grudging acknowledgment that these systems aren’t actually autonomous, even if they’re impressively automated.

But in other AI domains, the autonomy mythology persists and even accelerates. “Autonomous agents” has become a premium buzzword, with companies rushing to claim they’ve achieved this milestone. They haven’t. They’ve built sophisticated automation that can handle more variables and operate in less structured environments than previous systems. That’s an achievement worth celebrating, but it’s still not autonomy.

The Anthropomorphism Trap

The persistent mislabeling of AI systems as autonomous isn’t just a marketing problem; it also feeds on a deep human habit. When systems exhibit complex behavior that resembles human decision-making, we instinctively attribute human-like qualities to them: intention, understanding, agency.

A language model that generates coherent, contextually appropriate responses must “understand” what it’s saying, right? A chess engine that beats grandmasters must be “thinking” about strategy, right? A recommendation system that predicts our preferences must “know” us, right?

These systems are executing statistical patterns learned from vast datasets. They’re finding mathematical relationships in high-dimensional spaces. They’re optimizing loss functions. What looks like understanding is pattern matching. What seems like thinking is computation. What feels like knowledge is correlation.

This anthropomorphic attribution becomes particularly dangerous when we start making decisions as if these attributions were accurate. If we believe an AI system “understands” the nuances of medical diagnosis, we might trust its recommendations without appropriate oversight. If we think an AI “knows” how to evaluate loan applications fairly, we might not scrutinize its decisions for bias. If we assume an AI can “think” through the implications of its actions, we might give it more autonomy than is safe or appropriate.

Legal and Social Consequences of the Autonomy Myth

The language we use to describe AI systems has concrete legal and social implications. When we call systems “autonomous,” we create ambiguity about accountability, responsibility, and liability that our legal and social frameworks aren’t equipped to handle.

Consider liability when an AI system causes harm. If the system is merely automated, responsibility clearly lies with the humans who designed, deployed, and operated it. They set the parameters, chose the training data, defined the optimization functions, and decided where to deploy the system. But if the system is genuinely autonomous, if it exercises independent judgment and makes decisions its creators couldn’t predict or control, then the question of responsibility becomes far murkier.

When a Tesla on “Autopilot” (itself a problematic term suggesting autonomy) crashes, who’s liable? The driver who was supposed to be supervising? The company that marketed the system? The engineers who trained the model? The ambiguity has spawned countless lawsuits and regulatory investigations. Now multiply this across every domain where AI systems operate: medical diagnosis, financial trading, content moderation, criminal justice, and military applications.

The autonomy myth also shapes policy in dangerous ways. If policymakers believe AI systems are truly autonomous, they might craft regulations that treat these systems as independent entities with rights and responsibilities. They might create legal frameworks that recognize “AI decision-making” as a distinct category from human decision-making. They might establish accountability structures that diffuse responsibility across human and machine actors, ultimately leaving no one fully accountable.

We’re already seeing this play out. Some jurisdictions are debating whether AI systems should have legal personhood. Companies are arguing they can’t be held fully responsible for AI decisions because the systems are “autonomous.” Regulators are struggling to determine where to draw lines around liability and oversight. All of this stems from accepting, even implicitly, the premise that these systems possess autonomy they don’t have.

Beyond legal implications, the autonomy myth affects social dynamics and power structures. When companies deploy “autonomous systems,” they often use that language to distance themselves from the consequences of those systems’ actions. “The algorithm decided” becomes a way to avoid accountability. “The AI is autonomous” becomes an excuse for why bias, errors, or harmful outcomes can’t be fixed through simple policy changes.

This linguistic sleight of hand is particularly insidious because it obscures the human decisions embedded in every AI system: what data to train on, what objectives to optimize for, what tradeoffs to accept, what risks to tolerate, what use cases to enable. Every AI system embodies the values, biases, and priorities of its creators. Calling these systems autonomous obscures this reality and makes it harder to hold the right people accountable.

The Path Forward: Honest Language for Capable Systems

Do any of these arguments diminish the impressive capabilities of modern AI systems? Not at all. The achievements in machine learning, natural language processing, computer vision, and automated decision-making are genuinely remarkable. We’ve built systems that can accomplish tasks that seemed impossible a decade ago.

But impressive automation is still automation. Sophisticated execution within defined parameters is still not autonomous decision-making. Pattern recognition at scale is still not understanding. And mislabeling these capabilities makes them more dangerous.

What we need is honest language about what we’re building. Replace “autonomous agents” with “automated assistants.” Trade “self-driving cars” for “advanced driver assistance systems” or “highly automated vehicles.” Speak of “AI-augmented decision-making” rather than “AI decision-makers.” These are accurate descriptions that set appropriate expectations and maintain accountability.

The AI industry stands at a crossroads. We can continue down the path of hype and inflated claims, calling every advancement in automation a step toward autonomy and every impressive feat of pattern recognition a form of intelligence. This path leads to misaligned expectations, regulatory overreach or underreach, and eventual backlash when systems inevitably fail to live up to their anthropomorphized marketing.

Or we can choose honest language that accurately represents both the remarkable capabilities and the fundamental limitations of current systems. This path builds appropriate trust, enables effective regulation, and creates space for the technology to deliver real value without promising miracles it can’t provide.

Autonomy remains a theoretical destination, not a current reality. Until we acknowledge that distinction, we’re building our AI future on a foundation of linguistic confusion and anthropomorphic wishful thinking. And that’s a terrible way to make anything that matters.


Chris Hood is an AI strategist and author of the #1 Amazon Best Seller “Infailible” and “Customer Transformation,” and has been recognized as one of the Top 40 Global Gurus for Customer Experience.