The Reasoning Revolution and the Hierarchical Reasoning Model (HRM)

As artificial intelligence continues to evolve, most advances have felt incremental: impressive but predictable extensions of existing paradigms. The Transformer architecture that powers ChatGPT, Claude, and other leading language models is a scaled-up descendant of an architecture introduced in 2017. These systems have achieved remarkable capabilities, but they have also hit fundamental computational walls that no amount of parameter scaling can overcome. That is now changing with the Hierarchical Reasoning Model (HRM) from Sapient Intelligence, a breakthrough that not only improves AI performance but also fundamentally reimagines how machines think. Sapient has open-sourced HRM and published an accompanying paper that describes the architecture in detail.

The Chain-of-Thought Ceiling

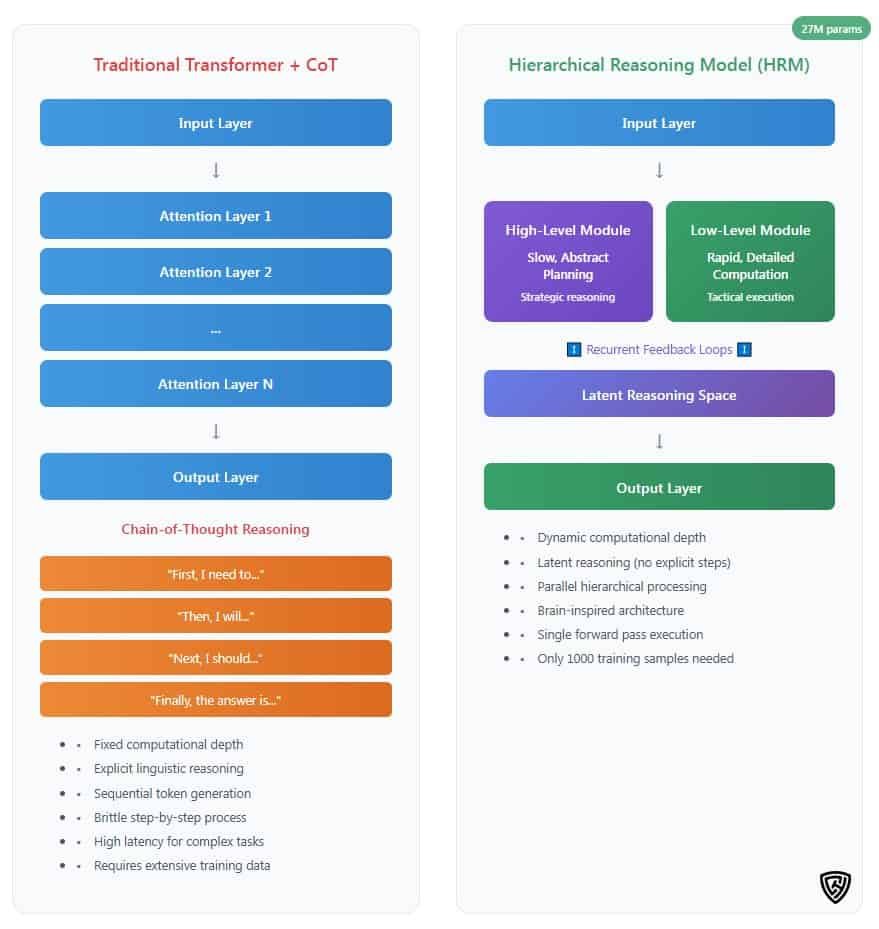

Current large language models rely heavily on Chain-of-Thought (CoT) reasoning, a technique that forces AI to externalize its thinking process into sequential text tokens. When you ask GPT-4 to solve a complex math problem, it “thinks out loud,” breaking the problem into verbal steps. This approach has been successful but comes with severe limitations that have become increasingly apparent as we push these systems toward more complex reasoning tasks.

CoT reasoning is fundamentally brittle. Like following a recipe where a single missed step ruins the entire dish, one incorrect intermediate step can derail the whole reasoning process. The method also suffers from what researchers call “token-level tethering”: reasoning is constrained by what can be expressed in language, which limits the model’s ability to engage in the kind of abstract, non-verbal computation that characterizes human thought at its deepest levels.
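A back-of-the-envelope model makes this brittleness concrete. Assuming, purely for illustration, that each intermediate step is correct independently with the same probability p, a chain of n steps is fully correct with probability p to the power n, so even small per-step error rates compound quickly. Real reasoning errors are not independent, so treat this as intuition rather than a measured property of any model; the function name is hypothetical.

```python
def chain_success_probability(p_step: float, n_steps: int) -> float:
    """Probability that every step in a reasoning chain is correct.

    Assumes each step succeeds independently with probability p_step,
    a simplification used here for illustration only.
    """
    if not 0.0 <= p_step <= 1.0:
        raise ValueError("p_step must be between 0 and 1")
    if n_steps < 0:
        raise ValueError("n_steps must be non-negative")
    return p_step ** n_steps


for n in (10, 50, 100):
    # Same 99% per-step accuracy, longer and longer chains.
    print(n, round(chain_success_probability(0.99, n), 3))
```

This prints 0.904, 0.605 and 0.366 for 10, 50 and 100 steps: under these assumptions, a model that is right 99% of the time at each step completes a 100-step chain without error only about 37% of the time.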

Perhaps most critically, CoT requires vast amounts of training data and generates excessive tokens for complex tasks, creating computational bottlenecks that make real-time reasoning prohibitively expensive. As AI systems are deployed at scale, these efficiency constraints become business-critical limitations.

The Computational Complexity Trap

The deeper issue lies in the fundamental architecture of current AI systems. Despite being called “deep learning,” modern transformers are paradoxically shallow in their computational structure. Their fixed depth places them in limited computational complexity classes such as AC⁰ or TC⁰, preventing them from solving problems that require polynomial time. Simply put, they cannot carry out the kind of algorithmic reasoning that complex planning or symbolic manipulation requires in a truly end-to-end manner.

This shows up as concrete performance gaps. When researchers tested transformer models on tasks like complex Sudoku puzzles, even very deep models fell short of optimal performance, suggesting architectural constraints that scaling alone cannot overcome.
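The depth argument can be illustrated with a toy pointer-chasing task. This is an analogy, not a formal complexity result: a computation limited to a fixed number of sequential steps, like a network with a fixed layer count, cannot follow a chain longer than that many hops, while a recurrent loop can keep iterating until it reaches the answer. The function names and the chain are hypothetical.

```python
from typing import Dict, Tuple


def follow_chain_fixed_depth(next_node: Dict[int, int], start: int, depth: int) -> int:
    """Take at most `depth` hops, mimicking a fixed number of layers."""
    node = start
    for _ in range(depth):
        # Stay put once the end of the chain has been reached.
        node = next_node.get(node, node)
    return node


def follow_chain_recurrent(next_node: Dict[int, int], start: int) -> Tuple[int, int]:
    """Hop until there is no successor; the step count grows with the input.

    Assumes the chain has no cycles, otherwise this loop would not terminate.
    """
    node, steps = start, 0
    while node in next_node:
        node = next_node[node]
        steps += 1
    return node, steps


chain = {i: i + 1 for i in range(10)}  # 0 -> 1 -> ... -> 10
print(follow_chain_fixed_depth(chain, 0, depth=4))  # stops at node 4, short of the end
print(follow_chain_recurrent(chain, 0))             # reaches node 10 after 10 hops
```

No fixed `depth` works for every input length, which is the intuition behind giving a model an iterative, recurrent core instead of a fixed stack of layers.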

Enter the Hierarchical Reasoning Model

The HRM represents a paradigm shift inspired by neuroscience rather than linguistics. Instead of forcing reasoning into the constraints of language, HRM mimics the hierarchical, multi-timescale processing of the human brain. The model features two interdependent recurrent modules: a high-level system responsible for slow, abstract planning, and a low-level system handling rapid, detailed computations.

This brain-inspired architecture enables what researchers call “latent reasoning,” a type of computation that occurs within the model’s internal hidden state space rather than through explicit linguistic steps. This aligns with cognitive science research, which shows that much of human reasoning occurs below the threshold of verbal articulation, in abstract computational spaces that don’t map neatly onto language.
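The two-timescale recurrence can be sketched in a few lines. This is a toy under stated assumptions, not Sapient’s implementation: the state size, the random weights, and the tanh update rule are hypothetical, and training and output decoding are omitted. What it does show is the nesting: the low-level module updates several times while the high-level state is held fixed, the high-level module then updates once using the low-level result, and all of it happens in hidden vectors without emitting any text.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in w]


def random_matrix(n: int, rng: random.Random, scale: float = 0.3) -> Matrix:
    return [[rng.uniform(-scale, scale) for _ in range(n)] for _ in range(n)]


class ToyHierarchicalRecurrence:
    """Toy two-module recurrence; sizes, weights and update rule are hypothetical."""

    def __init__(self, dim: int = 8, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.dim = dim
        # Low-level (fast) module reads its own state, the high-level state and the input.
        self.w_ll, self.w_lh, self.w_lx = (random_matrix(dim, rng) for _ in range(3))
        # High-level (slow) module reads its own state and the low-level result.
        self.w_hh, self.w_hl = (random_matrix(dim, rng) for _ in range(2))

    def low_step(self, z_l: Vector, z_h: Vector, x: Vector) -> Vector:
        terms = zip(matvec(self.w_ll, z_l), matvec(self.w_lh, z_h), matvec(self.w_lx, x))
        return [math.tanh(a + b + c) for a, b, c in terms]

    def high_step(self, z_h: Vector, z_l: Vector) -> Vector:
        terms = zip(matvec(self.w_hh, z_h), matvec(self.w_hl, z_l))
        return [math.tanh(a + b) for a, b in terms]

    def forward(self, x: Vector, cycles: int = 3, low_steps: int = 5) -> Tuple[Vector, int, int]:
        z_h = [0.0] * self.dim
        z_l = [0.0] * self.dim
        n_low = n_high = 0
        for _ in range(cycles):
            # Fast inner loop: several low-level updates while z_h stays fixed.
            for _ in range(low_steps):
                z_l = self.low_step(z_l, z_h, x)
                n_low += 1
            # Slow outer update: one high-level step per cycle.
            z_h = self.high_step(z_h, z_l)
            n_high += 1
        return z_h, n_low, n_high


model = ToyHierarchicalRecurrence(dim=8, seed=0)
state, n_low, n_high = model.forward([0.5] * 8, cycles=3, low_steps=5)
print(n_low, n_high)  # 15 low-level updates, 3 high-level updates
```

The effective computational depth here is cycles × low_steps, and it can be raised at run time without adding parameters, which is the property the hierarchical design exploits.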

Efficiency Through Biological Inspiration

The results speak for themselves. With just 27 million parameters, a fraction of the size of leading language models, HRM achieves exceptional performance on complex reasoning tasks using only 1,000 training samples. The model operates without pre-training or Chain-of-Thought data and still reaches near-perfect performance on challenging benchmarks, including complex Sudoku puzzles and optimal pathfinding in large mazes.

Most remarkably, HRM outperforms much larger models on the Abstraction and Reasoning Corpus (ARC), a key benchmark for measuring artificial general intelligence capabilities. This represents a fundamental efficiency breakthrough, suggesting that we’ve been optimizing the wrong variables in AI development.

Beyond Incremental Progress

What makes HRM genuinely revolutionary is its departure from the scaling paradigm that has dominated AI development. While the industry has focused on building ever-larger models with more parameters and training data, HRM demonstrates that architectural innovation can deliver superior performance with dramatically fewer resources.

This efficiency is not merely of academic interest; it is transformative for the practical deployment of AI. Smaller, more efficient models mean lower computational costs, reduced energy consumption, and the ability to run sophisticated reasoning systems on edge devices rather than requiring massive cloud infrastructure.
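To put the 27-million-parameter figure in perspective, the memory needed just to store a model’s weights is its parameter count multiplied by the bytes per parameter. This is a back-of-the-envelope sketch: it ignores activations, recurrent state, and runtime overhead, and the precisions listed are common storage formats, not a statement about how HRM’s released checkpoints are stored.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_mb(num_params: int, dtype: str) -> float:
    """Weight storage in megabytes (10**6 bytes); excludes activations and overhead."""
    return num_params * BYTES_PER_PARAM[dtype] / 1_000_000


HRM_PARAMS = 27_000_000  # parameter count reported for HRM

for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_mb(HRM_PARAMS, dtype):.0f} MB")
```

The weights come to roughly 108 MB at 32-bit precision, 54 MB at 16-bit, and 27 MB at 8-bit, a footprint that fits comfortably within many edge devices, although real inference memory will be somewhat higher.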

The Path to Agentic AI

HRM’s implications extend beyond standalone reasoning tasks. The model’s ability to conduct deep, multi-stage reasoning within its latent space makes it an ideal component for agentic AI systems.

Current AI agents often struggle with the computational overhead of explicit reasoning steps, which limits their real-time decision-making. HRM’s latent reasoning architecture could let agents move from reasoning to action more smoothly, strengthening both their internal logic and the actions that follow.

In this arrangement, the reasoning component operates efficiently within the agent’s cognitive architecture rather than as an external linguistic process.
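One way to picture this is an agent loop in which reasoning is an ordinary function call that returns a decision, with no intermediate text generated. In this sketch a breadth-first search stands in for the learned latent reasoner purely so the example runs; it is not HRM, and the `Reasoner` interface, the maze, and the function names are hypothetical. A trained model could occupy the same slot.

```python
from collections import deque
from typing import Callable, List, Optional, Tuple

Pos = Tuple[int, int]
# Hypothetical reasoner interface: (grid, current position, goal) -> next position.
Reasoner = Callable[[List[str], Pos, Pos], Optional[Pos]]


def bfs_next_step(grid: List[str], pos: Pos, goal: Pos) -> Optional[Pos]:
    """Stand-in for a learned reasoner: first move of a shortest path, or None."""
    rows, cols = len(grid), len(grid[0])
    parent = {pos: None}
    queue = deque([pos])
    while queue:
        cur = queue.popleft()
        if cur == goal:
            break
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#" and nxt not in parent:
                parent[nxt] = cur
                queue.append(nxt)
    if goal not in parent or goal == pos:
        return None
    # Walk back from the goal until reaching the step right after `pos`.
    step = goal
    while parent[step] != pos:
        step = parent[step]
    return step


def run_agent(grid: List[str], start: Pos, goal: Pos, reasoner: Reasoner, max_steps: int = 100) -> List[Pos]:
    """Agent loop: observe, call the reasoner internally, act. No text is produced."""
    pos, path = start, [start]
    for _ in range(max_steps):
        if pos == goal:
            break
        nxt = reasoner(grid, pos, goal)
        if nxt is None:  # no route found
            break
        pos = nxt
        path.append(pos)
    return path


maze = [
    "..#.",
    ".##.",
    "....",
]
path = run_agent(maze, (0, 0), (0, 3), bfs_next_step)
print(path)
```

Because the loop depends only on the `Reasoner` signature, swapping the search routine for a learned model would not change the agent’s structure, which is the kind of integration the paragraph above describes.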

Redefining What It Means to Think

Perhaps most fundamentally, HRM challenges our assumptions about machine cognition. By moving beyond language-based reasoning toward latent computational processes, the model suggests that artificial thinking doesn’t need to mirror human linguistic thought patterns. Instead, it can develop its own forms of abstract reasoning that may ultimately prove more efficient and powerful than our verbal approximations of thought.

This represents a maturation of AI development, moving from systems that simulate human communication patterns toward systems that engage in genuine computational reasoning. The distinction matters because it opens possibilities for forms of machine intelligence that aren’t constrained by the limitations of human language and thought.

What HRM Means for AGI Progression

While HRM is a remarkable breakthrough, it represents one advancement within a much larger journey toward artificial general intelligence. AGI will not emerge from a single architectural innovation. Instead, it will be built through the integration of multiple core advancements such as reasoning, memory, adaptability, perception, and moral alignment, each addressing different dimensions of intelligence.

Mark my words: as HRM picks up steam, the hype machine will shift into overdrive, and many will proclaim it as the arrival of AGI. It is not. HRM contributes to the reasoning dimension of intelligence, but reasoning alone does not make a system autonomous or general. Without memory, self-reflection, goal-setting, and ethical grounding, it remains one core building block rather than the complete structure.

This is where frameworks like the Q-AGI model are critical. Q-AGI provides measurable benchmarks across six cognitive capabilities: reasoning, understanding, memory, learning, expression, and transfer. Using Q-AGI, we can evaluate HRM’s place in the bigger picture and avoid mistaking one advancement for the whole of general intelligence.

In this sense, HRM is best viewed as a cornerstone rather than the entire foundation. It equips future agentic systems with a reasoning engine capable of abstraction and generalization, but those systems will still need other core components before they can approximate the full spectrum of human-like intelligence.

The Road Ahead

The open-sourcing of HRM by Sapient Intelligence marks a pivotal moment in artificial intelligence development. For the first time in years, we have a genuinely novel architectural approach that delivers dramatic efficiency improvements alongside enhanced capabilities.

As the AI community begins to explore and build upon HRM’s innovations, we’re likely to see rapid development of new architectures that combine the efficiency of hierarchical reasoning with the scale and capabilities of modern language models. The future of AI may not be about building ever-larger models, but about building smarter ones that think more like brains and less like sophisticated autocomplete systems.

The Hierarchical Reasoning Model represents not only progress in artificial intelligence but an evolution of it. And like all evolutionary leaps, its full implications will only become clear as the ecosystem adapts to this new form of machine cognition.

Chris Hood is an AI strategist and author of the #1 Amazon Best Seller “Infailible” and “Customer Transformation,” and has been recognized as one of the Top 40 Global Gurus for Customer Experience.